In this tutorial, we will go through what NVMe is and how this storage technology works. Every growing business faces a perpetual increase in data, so it must keep thinking about how that data will be captured, stored, accessed, and transformed. Maintaining performance and endurance at a large scale is a tough job.

Today, users of both consumer apps and business systems expect a fast response, while applications are becoming more complex and resource-intensive. NVMe offers a straightforward solution for such organizations, delivering high throughput across their workloads.

It greatly impacts businesses and how they deal with data, particularly those dealing with real-time analytics. In this blog, I will explain what NVMe is and how its storage architecture works. For all those who are curious, here is a read for you.

What is NVMe

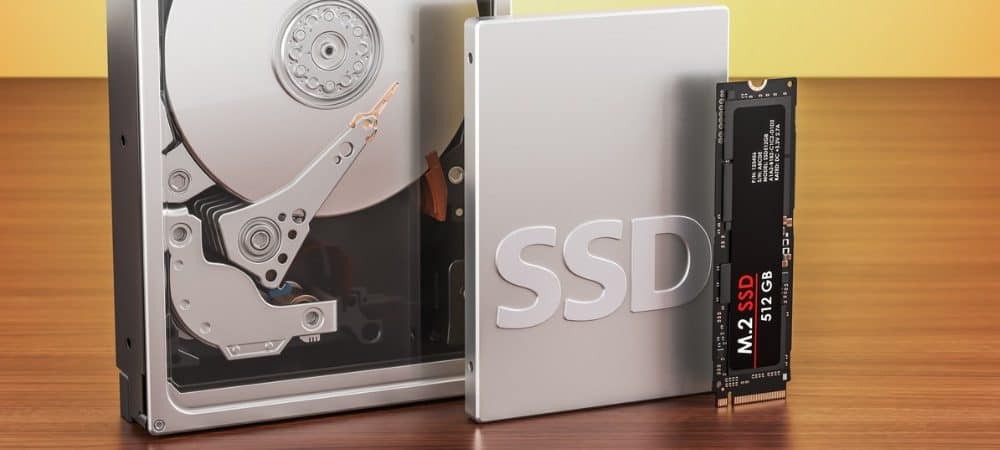

NVMe is an abbreviation for Non-Volatile Memory Express, a protocol for accessing high-speed storage media. It is a storage access and transport protocol for flash and next-generation solid-state drives, and its first specification was released in 2011.

It accesses flash storage over the PCI Express (PCIe) bus and supports thousands of parallel command queues, compared with the single command queue used by traditional SATA and SAS interfaces. Hence it delivers higher bandwidth and lower latency than drives attached through those older interfaces. It is a highly scalable storage protocol that connects the host to the memory subsystem, whether the underlying media is NAND flash or newer persistent memory.

The protocol is comparatively new and was designed specifically for non-volatile memory media, such as NAND flash and persistent memory, connected directly to the CPU via the PCIe interface. Because it runs over PCIe lanes, it offers far higher transfer speeds than the SATA interface: a PCIe 3.0 x4 link carries roughly 3.9 GB/s, versus about 0.6 GB/s for SATA III.
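To put the SATA comparison in rough numbers, here is a back-of-the-envelope sketch in Python. It assumes SATA III (6 GT/s with 8b/10b encoding) and a PCIe 3.0 x4 link (8 GT/s per lane with 128b/130b encoding), a common configuration for NVMe SSDs. The helper name is just for illustration, and real drives deliver less than these line-rate figures because of protocol and controller overhead.

```python
# Usable link bandwidth = signaling rate x encoding efficiency x lanes.
# These are line-rate figures only; protocol overhead is ignored.


def usable_gbytes_per_s(gtransfers_per_s: float, payload_bits: int,
                        total_bits: int, lanes: int = 1) -> float:
    """Return usable bandwidth in GB/s (10**9 bytes/s) after line encoding."""
    bits_per_s = gtransfers_per_s * 1e9 * payload_bits / total_bits * lanes
    return bits_per_s / 8 / 1e9


# SATA III: one 6 GT/s link with 8b/10b encoding (8 payload bits per 10).
sata3 = usable_gbytes_per_s(6.0, 8, 10)
# PCIe 3.0: 8 GT/s per lane with 128b/130b encoding; assume an x4 NVMe SSD.
pcie3_x4 = usable_gbytes_per_s(8.0, 128, 130, lanes=4)

print(f"SATA III:    {sata3:.2f} GB/s")
print(f"PCIe 3.0 x4: {pcie3_x4:.2f} GB/s")
print(f"ratio:       {pcie3_x4 / sata3:.1f}x")
```

Each newer PCIe generation roughly doubles the per-lane rate, so the gap widens further on PCIe 4.0 and 5.0 drives.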

For a deeper understanding of the benefits and considerations when choosing NVMe storage, check out our NVMe SSD Buying Guide to make an informed decision.

NVMe History

The first flash-based SSDs used legacy SATA/SAS physical interfaces and protocols to minimize changes to existing hard-drive-based enterprise storage systems. However, none of these interfaces was designed for high-speed storage media such as NAND flash and persistent memory.

Because of its interface speed, its ability to keep up with the performance of new storage media, and its proximity to the CPU, PCI Express (PCIe) was the logical next storage interface. PCIe slots connect directly to the CPU, providing memory-like access and allowing a very efficient software stack.

However, early PCIe SSDs met neither enterprise feature requirements nor industry standards; they relied on proprietary firmware. This made system scaling a challenge for several reasons, for example:

- Each device's firmware had to be run and managed separately.

- Firmware was often incompatible with different system software.

- Drives didn't always make optimal use of PCIe lanes and CPU proximity.

- They lacked several features needed for enterprise workloads.

The NVMe specifications were created to address all these issues. The NVMe protocol capitalizes on parallel, low-latency data paths to the underlying media, which gives it a significant advantage over the Serial ATA (SATA) and Serial Attached SCSI (SAS) protocols.

It accelerates existing applications that require higher performance and enables new applications for real-time data processing in the data centre and at the edge.

For a deeper look at how NVMe drives compare with other SSDs, check out our comparison of NVMe vs. SSD.

NVMe Architecture

NVMe is a scalable protocol specified for efficient data transport over PCIe to storage in NAND flash drives, and today it is deployed primarily on PCIe solid-state drives. It uses a minimum set of 13 required commands, ten admin commands and three I/O commands, as listed below:

Admin Commands

Only ten admin commands are required for NVMe, and these are as follows:

- Create I/O Submission Queue

- Delete I/O Submission Queue

- Create I/O Completion Queue

- Delete I/O Completion Queue

- Get Log Page

- Identify

- Abort

- Set Features

- Get Features

- Asynchronous Event Request

Optional admin commands:

- Firmware Activate

- Firmware Image Download

- Format NVM

- Security Send

- Security Receive

I/O Commands

- Read

- Write

- Flush

- Write Uncorrectable (optional)

- Compare (optional)

- Dataset Management (optional)

- Write Zeroes (optional)

- Reservation Register (optional)

- Reservation Report (optional)

- Reservation Acquire (optional)

- Reservation Release (optional)
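For reference, the sketch below records the commands listed above with their opcode values from the NVMe 1.x base specification, to the best of my knowledge; check the current specification before relying on them. The dictionary names are hypothetical. The point is that admin and I/O commands live in separate opcode spaces, and that the required set adds up to 13.

```python
# NVMe opcodes (hex), grouped by command set. Admin commands go to the admin
# queue; NVM I/O commands go to I/O submission queues.

ADMIN_MANDATORY = {
    "Delete I/O Submission Queue": 0x00,
    "Create I/O Submission Queue": 0x01,
    "Get Log Page": 0x02,
    "Delete I/O Completion Queue": 0x04,
    "Create I/O Completion Queue": 0x05,
    "Identify": 0x06,
    "Abort": 0x08,
    "Set Features": 0x09,
    "Get Features": 0x0A,
    "Asynchronous Event Request": 0x0C,
}
ADMIN_OPTIONAL = {
    "Firmware Activate": 0x10,  # called Firmware Commit in later revisions
    "Firmware Image Download": 0x11,
    "Format NVM": 0x80,
    "Security Send": 0x81,
    "Security Receive": 0x82,
}
IO_MANDATORY = {"Flush": 0x00, "Write": 0x01, "Read": 0x02}
IO_OPTIONAL = {
    "Write Uncorrectable": 0x04,
    "Compare": 0x05,
    "Write Zeroes": 0x08,
    "Dataset Management": 0x09,
    "Reservation Register": 0x0D,
    "Reservation Report": 0x0E,
    "Reservation Acquire": 0x11,
    "Reservation Release": 0x15,
}

mandatory = len(ADMIN_MANDATORY) + len(IO_MANDATORY)
print(f"mandatory commands: {mandatory}")  # 10 admin + 3 I/O = 13
# Opcodes are only unique within a command set: 0x01 is both
# Create I/O Submission Queue (admin) and Write (I/O).
```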

To optimise data storage and retrieval, NVMe can use up to 64K queues for parallel operation, with each queue holding up to 64K entries. In comparison, legacy SAS and SATA each support only a single queue, with 254 and 32 entries respectively. Each NVMe queue pair consists of a submission queue and a completion queue in host memory.
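NVMe queues are circular buffers managed with head and tail pointers. As a minimal sketch (the class and constant names are hypothetical, not taken from any driver), the Python below shows the ring-buffer rule that one slot is always left empty, so a queue with N entries holds at most N - 1 outstanding commands, and uses it to estimate NVMe's theoretical ceiling on in-flight commands. Compare that with the 32 commands of a single SATA NCQ queue.

```python
# Limits from the NVMe base specification: I/O queue IDs run 1..65535
# (ID 0 is the admin queue), and an I/O queue can have up to 65,536 entries.
MAX_IO_QUEUES = 65_535
MAX_QUEUE_ENTRIES = 65_536


class RingQueue:
    """Circular queue: empty when head == tail, full when tail + 1 == head."""

    def __init__(self, entries: int):
        self.entries = entries
        self.slots = [None] * entries
        self.head = 0  # next slot the consumer reads
        self.tail = 0  # next slot the producer writes

    def is_empty(self) -> bool:
        return self.head == self.tail

    def is_full(self) -> bool:
        # One slot stays unused so "full" can be told apart from "empty".
        return (self.tail + 1) % self.entries == self.head

    def push(self, item) -> None:
        if self.is_full():
            raise OverflowError("queue full")
        self.slots[self.tail] = item
        self.tail = (self.tail + 1) % self.entries

    def pop(self):
        if self.is_empty():
            raise IndexError("queue empty")
        item = self.slots[self.head]
        self.head = (self.head + 1) % self.entries
        return item


def max_outstanding(queues: int, entries: int) -> int:
    """Upper bound on in-flight commands across all I/O queues."""
    return queues * (entries - 1)


q = RingQueue(4)
for cmd in ("read", "write", "flush"):
    q.push(cmd)
print(q.is_full())  # True: three commands fill a four-entry queue
print(f"NVMe ceiling: {max_outstanding(MAX_IO_QUEUES, MAX_QUEUE_ENTRIES):,}")
```

In practice no device supports anywhere near this many queues; drivers typically create roughly one queue pair per CPU core.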

Host software places commands in a submission queue, and the NVMe controller places command completions into the associated completion queue. Multiple submission queues can share a single completion queue, and the controller arbitrates among submission queues, optionally with different priorities if it supports weighted round-robin arbitration.
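A toy model helps show how several submission queues can feed one completion queue. The sketch below is hypothetical Python, not driver code: the controller services submission queues with plain round-robin arbitration (the NVMe specification also defines optional weighted round-robin with an urgent priority class, which is not modeled here), and each completion records its submission queue ID and command ID so the host can match it to the original command.

```python
from collections import deque


class Controller:
    """Toy controller: round-robin over submission queues, one shared CQ."""

    def __init__(self, sq_ids):
        self.sqs = {sqid: deque() for sqid in sq_ids}
        self.cq = deque()           # single completion queue shared by all SQs
        self._order = list(sq_ids)  # fixed arbitration order
        self._next = 0              # index of the SQ to service next

    def submit(self, sqid, cid, opcode):
        self.sqs[sqid].append((cid, opcode))

    def step(self) -> bool:
        """Fetch and 'execute' one command from the next non-empty SQ."""
        for _ in range(len(self._order)):
            sqid = self._order[self._next]
            self._next = (self._next + 1) % len(self._order)
            if self.sqs[sqid]:
                cid, _opcode = self.sqs[sqid].popleft()
                # The SQ ID plus command ID identify the command, even
                # though several SQs report into this one CQ.
                self.cq.append({"sqid": sqid, "cid": cid, "status": 0})
                return True
        return False  # every SQ is empty


ctrl = Controller([1, 2])
ctrl.submit(1, cid=10, opcode="read")
ctrl.submit(1, cid=11, opcode="read")
ctrl.submit(2, cid=20, opcode="write")
while ctrl.step():
    pass
print([(c["sqid"], c["cid"]) for c in ctrl.cq])  # [(1, 10), (2, 20), (1, 11)]
```

Note how the second command from queue 1 waits until queue 2 has had its turn; that fairness is the point of arbitration.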

NVMe also supports MSI-X (Message Signaled Interrupts Extended), interrupt steering, and scatter/gather I/O, which minimize CPU overhead on data transfers. This architecture allows applications to start, execute, and finish multiple I/O requests simultaneously, leading to higher efficiency and greater speed.

Optional features include end-to-end data protection (compatible with the T10 DIF and DIX standards), enhanced error reporting, and autonomous power state transitions for client devices.
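End-to-end protection works by attaching protection information (PI) to each logical block. As a minimal sketch, assume the common 8-byte T10 PI format: a big-endian guard tag computed as CRC-16/T10-DIF over the block data, a 2-byte application tag, and a 4-byte reference tag holding the low 32 bits of the LBA, as in Type 1 protection. The Python below generates and checks such a tuple. The function names are hypothetical, and real drives and host adapters compute this in hardware.

```python
import struct


def crc16_t10dif(data: bytes) -> int:
    """Bitwise CRC-16/T10-DIF: poly 0x8BB7, init 0, no reflection, no final XOR."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x8BB7) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def protection_info(block: bytes, app_tag: int, lba: int) -> bytes:
    """Build an 8-byte PI tuple: guard (2 B), app tag (2 B), ref tag (4 B)."""
    guard = crc16_t10dif(block)
    return struct.pack(">HHI", guard, app_tag, lba & 0xFFFFFFFF)


def verify(block: bytes, pi: bytes, lba: int) -> bool:
    """Check the guard (data integrity) and reference tag (right LBA)."""
    guard, _app_tag, ref_tag = struct.unpack(">HHI", pi)
    return guard == crc16_t10dif(block) and ref_tag == (lba & 0xFFFFFFFF)


data = bytes(512)  # one 512-byte logical block of zeros
pi = protection_info(data, app_tag=0, lba=1234)
print(len(pi), verify(data, pi, 1234))  # 8 True
corrupted = b"\x01" + data[1:]
print(verify(corrupted, pi, 1234))      # False: guard no longer matches
```

The guard catches corrupted data, while the reference tag catches data that is intact but was written to or read from the wrong LBA.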

Here is a very simplified view of the communication between the host and the NVMe controller. This streamlined exchange with the underlying media minimizes latency and significantly lowers overhead.

- The host writes I/O commands into a submission queue, then writes the submission queue's tail doorbell register to signal that new commands are ready.

- The NVMe controller fetches the commands, executes them, and posts entries to the associated completion queue, followed by an interrupt to the host.

- The host then processes the completion entries and writes the completion queue's head doorbell register to signal that those entries have been consumed.
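The three steps above can be modeled in a few lines. This is a hypothetical Python sketch, not driver code: doorbells are plain attributes rather than memory-mapped registers, "executing" a command just posts a success completion, and the host reads the controller's SQ head directly instead of from completion entries as a real driver would. It does show the phase tag, which NVMe uses so the host can tell newly posted completion entries from stale ones after the completion queue wraps around.

```python
class QueuePair:
    """Toy model of one NVMe submission/completion queue pair."""

    def __init__(self, depth: int):
        self.depth = depth
        self.sq = [None] * depth   # submission slots (command IDs only)
        self.cq = [None] * depth   # completion entries: (cid, status, phase)
        self.sq_tail = 0           # host's next free SQ slot
        self.sq_tail_doorbell = 0  # written by host, read by controller
        self.cq_head_doorbell = 0  # written by host, read by controller
        self.ctrl_sq_head = 0      # controller's next SQ slot to fetch
        self.ctrl_cq_tail = 0      # controller's next CQ slot to fill
        self.ctrl_phase = 1        # phase tag written on the current CQ pass
        self.host_cq_head = 0      # host's next CQ slot to inspect
        self.host_phase = 1        # phase tag the host expects this pass

    def host_submit(self, cid: int) -> None:
        """Step 1: place a command in the SQ and ring the SQ tail doorbell."""
        # Simplification: a real host learns the SQ head from completions.
        if (self.sq_tail + 1) % self.depth == self.ctrl_sq_head:
            raise OverflowError("submission queue full")
        self.sq[self.sq_tail] = cid
        self.sq_tail = (self.sq_tail + 1) % self.depth
        self.sq_tail_doorbell = self.sq_tail

    def controller_process(self) -> bool:
        """Step 2: fetch, execute, post completions; True stands in for an interrupt."""
        posted = False
        while self.ctrl_sq_head != self.sq_tail_doorbell:
            if (self.ctrl_cq_tail + 1) % self.depth == self.cq_head_doorbell:
                break  # CQ full: wait until the host consumes entries
            cid = self.sq[self.ctrl_sq_head]
            self.ctrl_sq_head = (self.ctrl_sq_head + 1) % self.depth
            self.cq[self.ctrl_cq_tail] = (cid, 0, self.ctrl_phase)  # 0 = success
            self.ctrl_cq_tail = (self.ctrl_cq_tail + 1) % self.depth
            if self.ctrl_cq_tail == 0:
                self.ctrl_phase ^= 1  # wrapped around: flip the phase tag
            posted = True
        return posted

    def host_reap(self) -> list:
        """Step 3: consume new completions and ring the CQ head doorbell."""
        done = []
        entry = self.cq[self.host_cq_head]
        while entry is not None and entry[2] == self.host_phase:
            done.append(entry[0])
            self.host_cq_head = (self.host_cq_head + 1) % self.depth
            if self.host_cq_head == 0:
                self.host_phase ^= 1
            entry = self.cq[self.host_cq_head]
        self.cq_head_doorbell = self.host_cq_head
        return done


qp = QueuePair(depth=4)
for cid in (1, 2, 3):
    qp.host_submit(cid)
print(qp.controller_process(), qp.host_reap())  # True [1, 2, 3]
for cid in (4, 5):
    qp.host_submit(cid)
qp.controller_process()
print(qp.host_reap())  # [4, 5]: the second pass wraps and flips the phase
```

Because the host only checks the phase bit in host memory, it never has to read a controller register to find new completions, which keeps the fast path cheap.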

The NVMe specification progressed from version 1.0 through 1.1 to 1.2, and later revisions have followed. Version 1.0 featured end-to-end protection, queuing, and security; version 1.1 added autonomous power state transitions and multipath I/O, including namespace sharing and reservations.

Version 1.2 added features such as atomicity enhancements, live firmware updates, namespace management, the host memory buffer and controller memory buffer, temperature thresholds, and pass-through support. Later revisions extended the specification further, for example with the Sanitize command and Streams.

The NVMe Management Interface (NVMe-MI) standardizes out-of-band management independent of the physical transport. It discovers and configures NVMe devices and maps the management interface to one or more out-of-band physical interfaces, for example, I2C or PCIe VDM.

Benefits of NVMe for Data Storage

NVMe has the following advantages over previous protocols:

- It cuts the time applications spend waiting on storage, which has made it a big hit in data-driven enterprises.

- It does more than provide solid-state storage: it is designed to let multicore CPUs and gigabytes of system memory work with storage in parallel, which is why it far outperforms mechanical hard disk drives.

- NVMe includes a host memory buffer (HMB) feature that lets DRAM-less controllers keep their non-critical data structures in the system's memory. This is well suited to client and mobile NVMe controllers.

- It supports parallelism across the system's CPU cores and supports non-uniform memory access (NUMA) architectures.

- Lockless command submission and completion make the protocol more efficient. Commands are issued with simple PCI Express register (doorbell) writes, and every command uses the same fixed 64-byte format.

- NVMe provides a controller memory buffer (CMB) feature that allows hosts to prepare commands in controller memory, so the controller does not need to fetch them from host memory with PCIe reads.

- Its streamlined command set lets the controller parse and manipulate data efficiently. NVMe focuses on passing memory blocks rather than SCSI commands, which add latency compared with NVMe.
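Every NVMe submission queue entry is a fixed 64-byte structure and every completion queue entry is 16 bytes, which is part of what keeps command handling simple. The Python sketch below encodes a Read command and decodes a completion with the standard `struct` module, following the field layout of the NVMe base specification as I understand it (little-endian fields, PRP data pointers, zero-based block count). The helper names and buffer address are made up for illustration; a real driver would also set up PRP lists and DMA-capable memory.

```python
import struct

# Submission queue entry, 64 bytes, little-endian:
#   CDW0: opcode (1 B), flags (1 B), command ID (2 B); NSID (4 B);
#   CDW2-3 reserved (8 B); metadata pointer (8 B); PRP1 (8 B); PRP2 (8 B);
#   CDW10-CDW15 (6 x 4 B).
SQE_FORMAT = "<BBHIQQQQ6I"
# Completion queue entry, 16 bytes: DW0, DW1 (reserved), SQ head pointer,
# SQ ID, command ID, then phase tag (bit 0) plus status field (bits 15:1).
CQE_FORMAT = "<IIHHHH"

OPC_READ = 0x02  # NVM command set Read


def build_read(cid: int, nsid: int, slba: int, nblocks: int,
               prp1: int, prp2: int = 0) -> bytes:
    """Encode a Read command; the block count in CDW12 is zero-based."""
    cdw10 = slba & 0xFFFFFFFF       # starting LBA, low 32 bits
    cdw11 = slba >> 32              # starting LBA, high 32 bits
    cdw12 = (nblocks - 1) & 0xFFFF  # number of logical blocks minus one
    return struct.pack(SQE_FORMAT, OPC_READ, 0, cid, nsid, 0, 0, prp1, prp2,
                       cdw10, cdw11, cdw12, 0, 0, 0)


def parse_completion(raw: bytes) -> dict:
    dw0, _dw1, sq_head, sq_id, cid, status = struct.unpack(CQE_FORMAT, raw)
    return {"cid": cid, "sq_id": sq_id, "sq_head": sq_head,
            "phase": status & 1,
            "status": status >> 1}  # status field; 0 means success


sqe = build_read(cid=7, nsid=1, slba=2048, nblocks=8, prp1=0x1000_0000)
print(len(sqe))  # 64
raw_cqe = struct.pack(CQE_FORMAT, 0, 0, 1, 1, 7, 0x0001)
print(parse_completion(raw_cqe))
```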

To understand how NVMe technology impacts storage solutions, you can explore the broader concept of storage servers in our article on What Is a Storage Server.

NVMe Use Cases

- NVMe storage is especially valuable in business scenarios, where every millisecond counts.

- It helps with real-time customer interactions, such as those in finance and software sales.

- It is also used for artificial intelligence, machine learning, and advanced analytics apps.

- DevOps teams use it to run more iterations in less time.

NVMe Standards

NVMe is a collection of standards managed by NVM Express, the consortium responsible for its development. It is currently the industry standard for PCIe solid-state drives and covers form factors such as the standard 2.5" U.2, internally mounted M.2, Add-In Card (AIC), and more.

The protocol's feature set also continues to develop. Features include multiple queues, combining I/Os, defining ownership and prioritization, multipath and I/O virtualization, and capturing asynchronous device updates.

The standard is being applied to more use cases, one example being Zoned Storage and Zoned Namespace (ZNS) SSDs. The NVMe protocol is also not limited to connecting flash drives: it can be carried over a network as NVMe over Fabrics (NVMe-oF), which provides a high-performance storage networking fabric and a common framework for a variety of transports.

NVMe Zoned Namespace (ZNS)

NVMe ZNS originated as a technical proposal by the NVM Express organization to address large-scale data management in big infrastructure deployments by moving intelligent data placement from the drive to the host.

For this purpose, it divides a namespace's logical block address (LBA) space into zones that must be written sequentially. Compared with traditional NVMe SSDs, this provides the following benefits:

- Reduced write amplification, and thus higher performance.

- Higher usable capacity, since less over-provisioning is needed.

- Improved latency.

- A smaller SSD controller DRAM footprint, and hence lower cost.
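To make the sequential-write rule concrete, here is a minimal Python sketch of a single zone. It is a simplified, hypothetical model: it tracks one write pointer, rejects writes that do not land exactly on it, mimics the Zone Append idea (the drive chooses the LBA and reports it back), and resets the zone so it can be rewritten. Real ZNS devices also distinguish zone size from zone capacity and track zone states, which this sketch leaves out.

```python
class Zone:
    """Toy sequential-write-required zone with a single write pointer."""

    def __init__(self, start_lba: int, capacity: int):
        self.start_lba = start_lba
        self.capacity = capacity
        self.write_pointer = start_lba  # next LBA that may be written

    @property
    def is_full(self) -> bool:
        return self.write_pointer == self.start_lba + self.capacity

    def write(self, lba: int, nblocks: int) -> None:
        """Regular write: must start exactly at the write pointer."""
        if lba != self.write_pointer:
            raise ValueError(
                f"non-sequential write at {lba}; write pointer is {self.write_pointer}")
        if self.write_pointer + nblocks > self.start_lba + self.capacity:
            raise ValueError("write exceeds zone capacity")
        self.write_pointer += nblocks

    def append(self, nblocks: int) -> int:
        """Zone Append style: the device picks the LBA and returns it."""
        lba = self.write_pointer
        self.write(lba, nblocks)
        return lba

    def reset(self) -> None:
        """Rewind the write pointer so the whole zone can be rewritten."""
        self.write_pointer = self.start_lba


zone = Zone(start_lba=0, capacity=1024)
zone.write(0, 256)
print(zone.append(128))  # 256
try:
    zone.write(100, 8)   # in-place overwrite is not allowed
except ValueError as err:
    print(err)
zone.reset()
print(zone.write_pointer)  # 0
```

Because the host must respect the write pointer, the drive never has to remap scattered overwrites internally, which is where the write-amplification and DRAM savings come from.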

NVMe over Fabrics

- NVMe is not just faster flash storage but also an end-to-end standard, making it easier to move data between storage systems and servers efficiently and quickly.

- NVMe's performance and latency benefits extend across network fabrics such as Ethernet, Fibre Channel, and InfiniBand.

- It delivers higher IOPS and lower latency all the way from the host software stack through the data fabric to the storage array.

NVMe over Fibre Channel

NVMe over Fibre Channel (NVMe/FC) has gained traction, helped by NetApp's release of NVMe/FC support in ONTAP, its data management software. Many enterprises have built their entire infrastructure around Fibre Channel because of its performance and reliability, and its support for fabric-based zoning and name services has also contributed to its success.

Applications such as databases run much faster over NVMe/FC than over FCP (the SCSI protocol with an underlying Fibre Channel connection). Getting started is also easy: it can be a simple, non-disruptive software upgrade.

NVMe is Important for your Business

Enterprises are often data-starved at first, but the exponential rise in data and the demands of new applications can bog SSDs down. Even a high-performance SSD attached through conventional protocols suffers higher latency, lower efficiency, and poor quality of service when confronted with new fast-data challenges.

Conventional protocols consume many CPU cycles to process data and make it available to applications. Those wasted compute cycles cost businesses real money and lead to higher investment costs, while infrastructure budgets don't grow at the pace of data.

A growing organization's databases grow tremendously, putting it under pressure to maximize returns on infrastructure. NVMe's features help avoid bottlenecks everywhere from scaling up traditional databases to emerging edge computing.

It can handle rigorous workloads with a smaller infrastructure footprint and is well able to meet new data demands, reducing the organization's total cost of ownership and accelerating business growth. Designed for high-performance memory, NVMe stands out in highly demanding, compute-intensive enterprise and cloud ecosystems.

Conclusion

NVMe enables customers to use the full potential of flash storage drives, such as their input/output performance, by supporting more processor cores, lanes per drive, I/O threads, and queues. This technology significantly enhances data transfer speeds and reduces latency, making it an ideal choice for applications that require high-performance storage, such as databases, virtual machines, and big data analytics. If you're looking to boost your server's performance, consider opting for NVMe VPS hosting, which leverages NVMe technology to deliver superior speed and efficiency compared to traditional SSD or HDD solutions.

By choosing NVMe VPS, you ensure that your applications run faster and more efficiently, providing a seamless experience for your users.

It eliminates the overhead of SCSI and ATA command processing and implements simplified command processing: all commands are the same size, with their fields in the same locations. Hence I conclude that NVMe is less complex, more efficient, and easier to use than legacy systems.
