If you’ve ever faced performance challenges while developing an application, you probably know how quickly traditional databases like MySQL or PostgreSQL start to struggle under heavy traffic. That’s where in-memory storage technologies such as Redis and Memcached come into play, cutting response times to the sub-millisecond range. But the real question is: Redis or Memcached? In this post, we’ll take a deep dive into their architecture, performance, and features to help you choose the right one for your project.

What Is Redis?

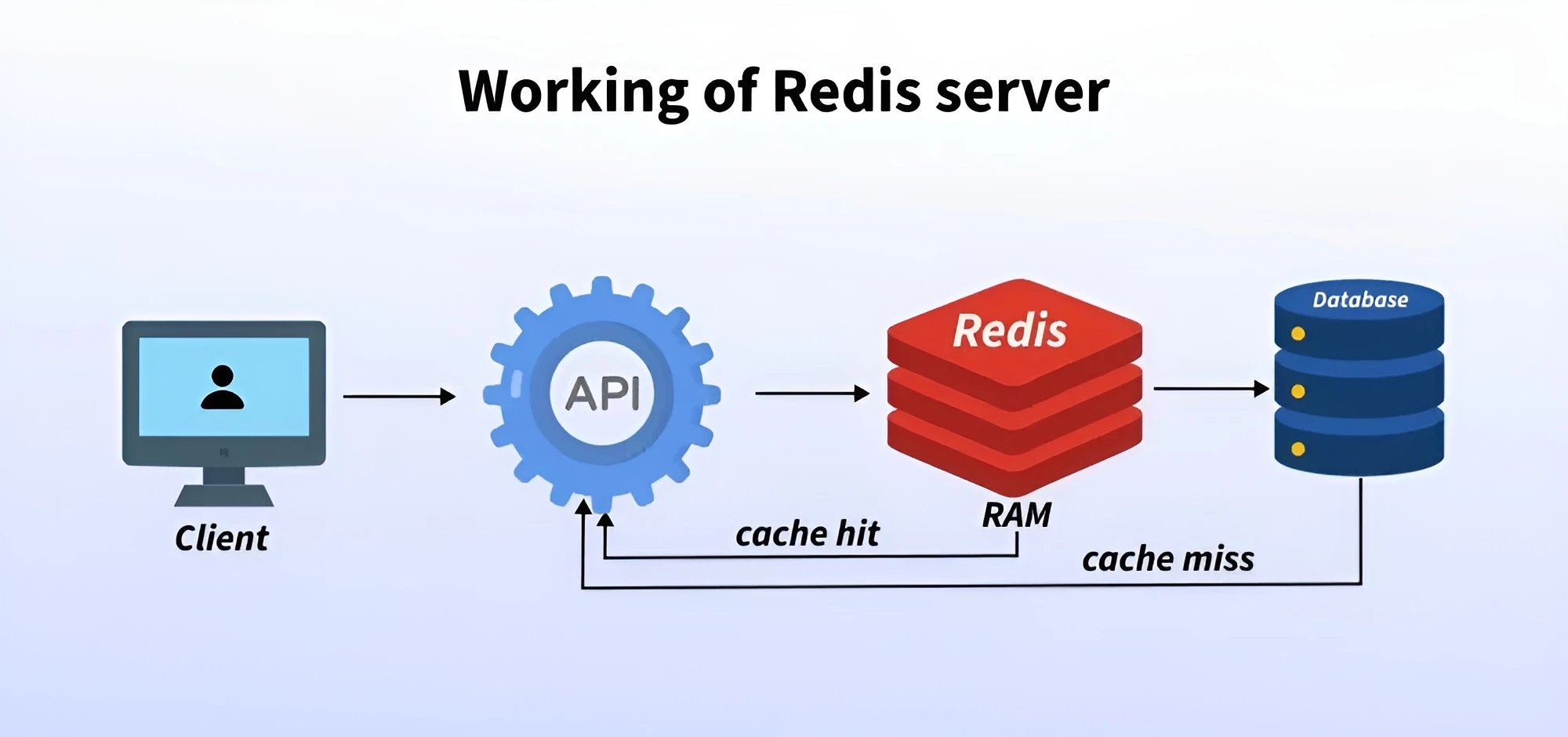

Redis is essentially a data structure server that can act as a database, a cache, and a message broker. Put more simply, Redis is an in-memory data store that keeps data in RAM to deliver much faster response times than disk-based databases. Over the years, Redis has evolved significantly, and today it even includes vector search for artificial intelligence workloads.

Data structures that make Redis stand out

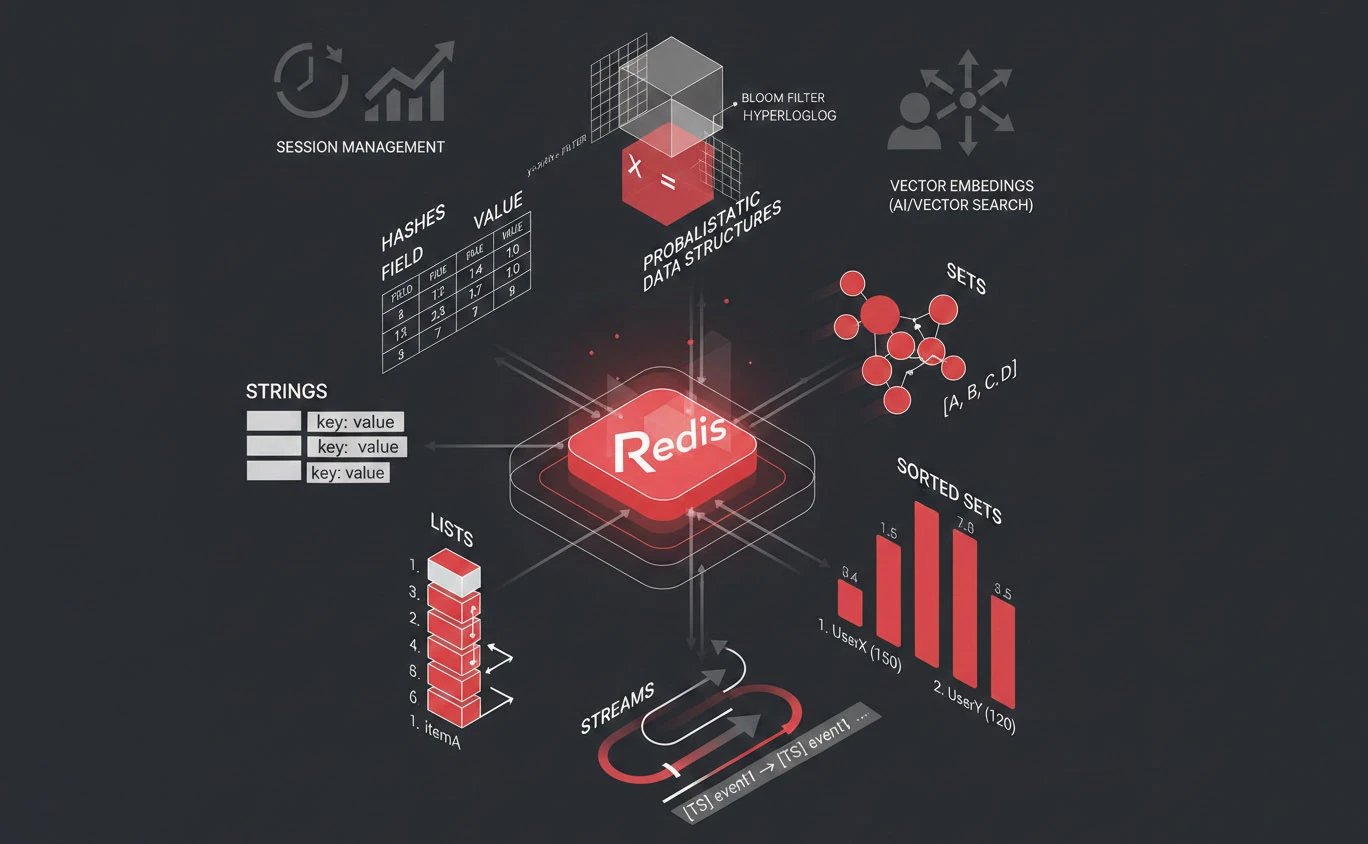

One of the reasons you’ll likely fall in love with Redis is the wide variety of data structures it offers. Unlike older systems that only handle simple strings, Redis allows you to move part of your application logic into the storage layer. This reduces network round-trips and significantly improves overall performance.

- Strings: The most basic data type, capable of storing up to 512 MB of text or binary data.
- Lists: An ordered collection of strings with constant-time (O(1)) insertion and removal at both ends, making them ideal for message queues.
- Hashes: Field–value structures designed for storing objects, allowing individual fields to be updated without fetching the entire object.
- Sets: Unordered collections of unique strings that support server-side set operations such as union and intersection.
- Sorted Sets: Every member has a score and members are kept ordered by it, which makes them a natural fit for leaderboards and ranking systems.
- Streams: An append-only log structure for event data and real-time messaging, introduced in Redis 5.0 and extended in later releases.
- Probabilistic data structures: Structures such as HyperLogLog (estimating the number of unique users or items) and Bloom filters (approximate membership checks) that trade a small error rate for very low memory use.

Smart use cases for Redis: Redis can be used across different parts of your infrastructure. For session management, its high speed and optional data persistence make it a top choice. In real-time analytics, sorted sets provide instant insight into trends. And with vector search built into Redis 8 (earlier versions offered it through the Redis Stack modules), Redis can also serve as a vector database for machine learning projects and semantic search.

Learn more about how Redis communicates over the network and its default connection settings in our What is Redis Port blog post.

What Is Memcached?

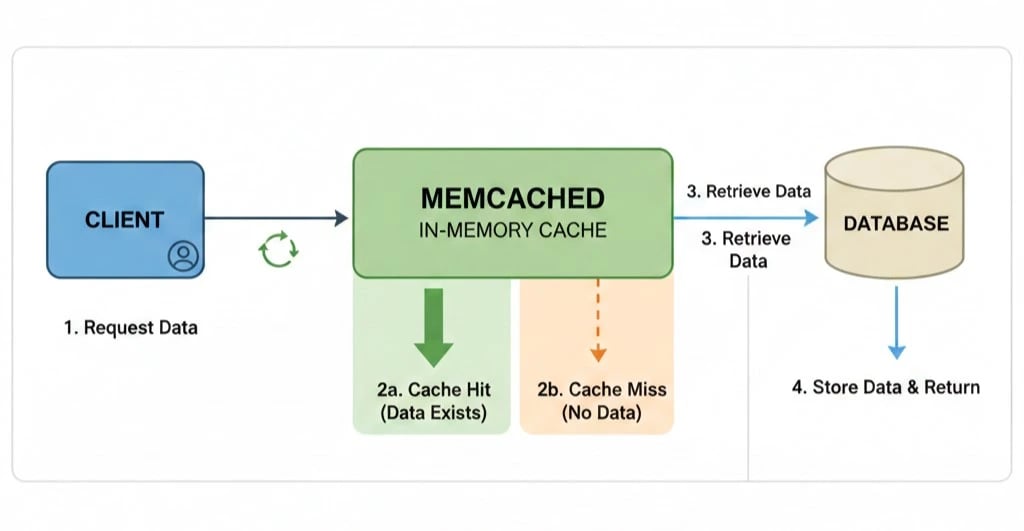

If Redis is a general-purpose tool, Memcached is a specialized one that does a single job, but does it with maximum speed and simplicity. Memcached is a distributed in-memory object caching system, originally designed in 2003 for the LiveJournal website, and it is still used today by giants like Facebook.

The biggest advantage Memcached offers is its multi-threaded architecture. In addition, it uses a memory management model called Slab Allocation, in which memory is divided into fixed-size chunks to prevent fragmentation.

Limitations and strengths of Memcached

It’s important to remember that Memcached only understands strings. If you want to store complex objects, you must serialize them in your application (for example, into JSON or a binary format) before sending them to Memcached. Also, Memcached has no concept of data persistence; once the server restarts, the entire cache is lost.

Discover other high-performance in-memory solutions you can use instead of Redis in our Redis Alternatives post.

Key Differences Between Redis and Memcached

Now that we’re familiar with both systems, let’s dive into their most important differences. These distinctions determine when one has an advantage over the other.

Architecture and Design Philosophy

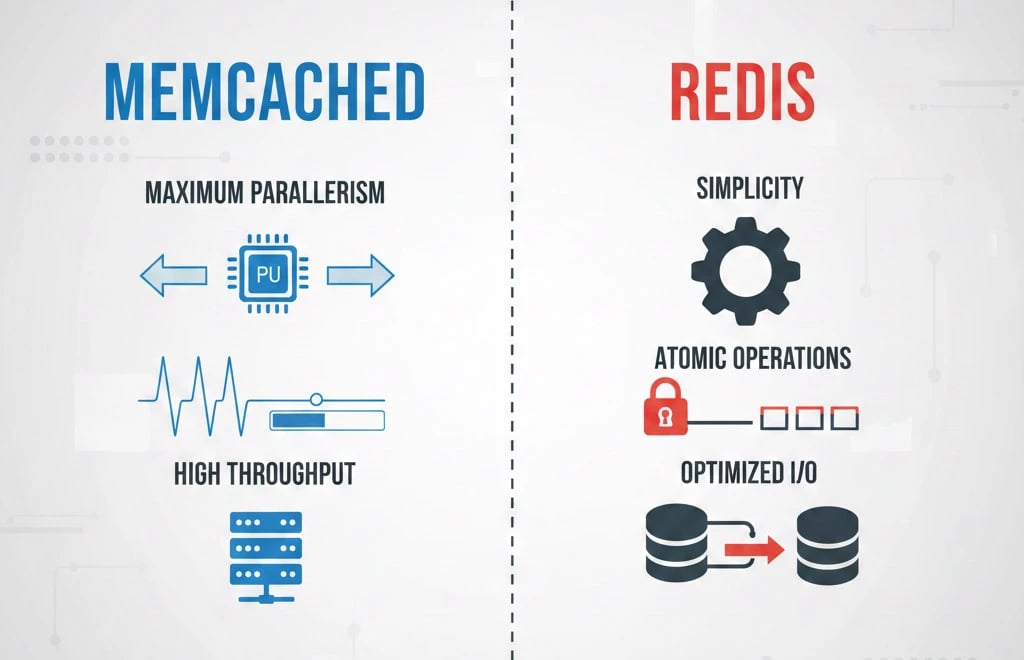

Memcached is multi-threaded and can use all available CPU cores (for example, 16 cores) within a single instance. This makes it very powerful for workloads involving large volumes of simple read and write operations.

Redis, on the other hand, executes commands on a single main thread. At first glance, this may seem like a weakness, but it eliminates complex locking mechanisms and guarantees that each command runs atomically. Starting with version 6, Redis introduced multi-threaded I/O to reduce network overhead, though command execution itself remains single-threaded. In version 8.0, this I/O threading was further optimized, significantly reducing command latency.

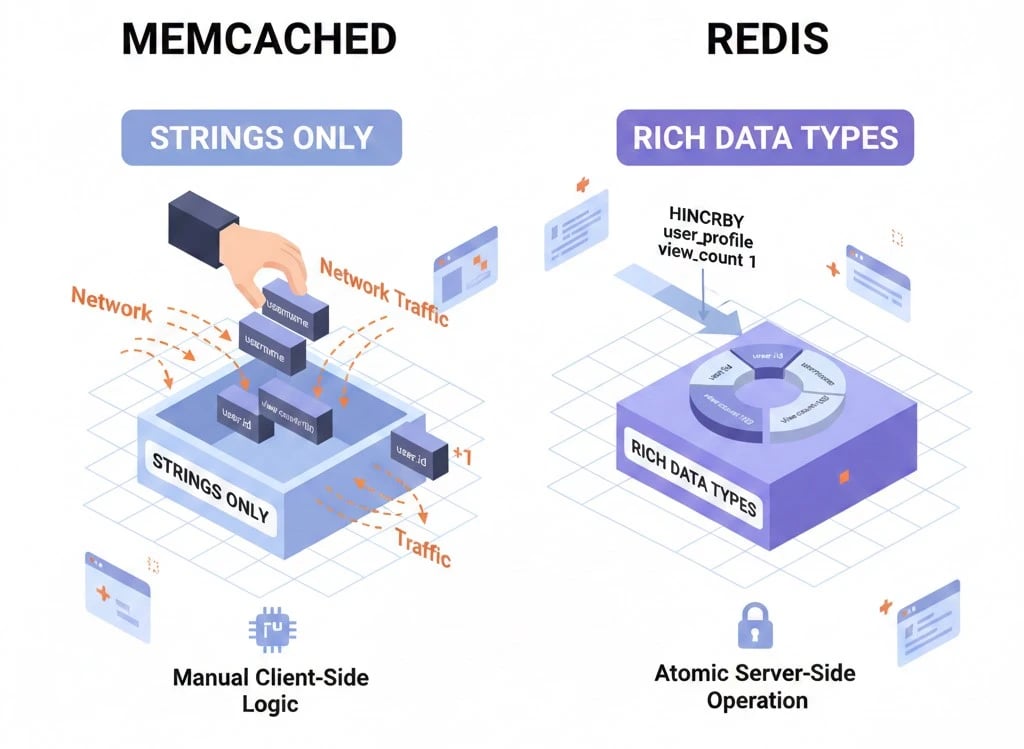

Data Types and Flexibility

As mentioned earlier, Redis supports rich data types, while Memcached only understands strings. But how does this affect your work? Imagine you want to update a “view count” field in a user profile. In Memcached, you must fetch the entire profile object from memory, deserialize it, increment the view count, serialize it again, and send it back to the server. In Redis, you simply send the HINCRBY command, and all of this happens atomically on the server side. The result: less network traffic and higher performance.
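To put numbers on the network-traffic argument, the sketch below builds the bytes each approach actually puts on the wire: the Memcached text-protocol get/set exchange versus a single Redis HINCRBY encoded in RESP. The profile contents and the user:42 key are hypothetical, and the sketch assumes the Redis copy of the profile is stored as a hash.

```python
import json


def resp_command(*args: str) -> bytes:
    """Encode a command the way Redis clients send it: a RESP array of bulk strings."""
    parts = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        parts.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(parts)


def memcached_get(key: str) -> bytes:
    """Memcached text-protocol retrieval command."""
    return f"get {key}\r\n".encode()


def memcached_hit_reply(key: str, value: bytes, flags: int = 0) -> bytes:
    """Server reply to a cache hit: VALUE header line, data block, END."""
    return f"VALUE {key} {flags} {len(value)}\r\n".encode() + value + b"\r\nEND\r\n"


def memcached_set(key: str, value: bytes, flags: int = 0, exptime: int = 0) -> bytes:
    """Memcached text-protocol storage command: header line, then the data block."""
    return f"set {key} {flags} {exptime} {len(value)}\r\n".encode() + value + b"\r\n"


# Hypothetical profile; only the "views" field needs to change.
profile = {"id": 42, "name": "Ada", "bio": "x" * 200, "views": 1041}
stored_blob = json.dumps(profile).encode()

# Memcached: fetch the whole blob, deserialize, increment, serialize, store it back.
# This read-modify-write is not atomic: another client can write between get and set.
fetched = json.loads(stored_blob)
fetched["views"] += 1
new_blob = json.dumps(fetched).encode()
memcached_bytes = (
    len(memcached_get("user:42"))
    + len(memcached_hit_reply("user:42", stored_blob))
    + len(memcached_set("user:42", new_blob))
    + len(b"STORED\r\n")
)

# Redis: one HINCRBY on a hash; the server replies with the new value as a RESP integer.
redis_bytes = len(resp_command("HINCRBY", "user:42", "views", "1")) + len(
    f":{fetched['views']}\r\n".encode()
)

print(f"Memcached round trip: {memcached_bytes} bytes, Redis HINCRBY: {redis_bytes} bytes")
```

The gap grows with the size of the stored object, because the Memcached path moves the whole serialized profile twice while the Redis command size depends only on the key and field names.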

Memory Management

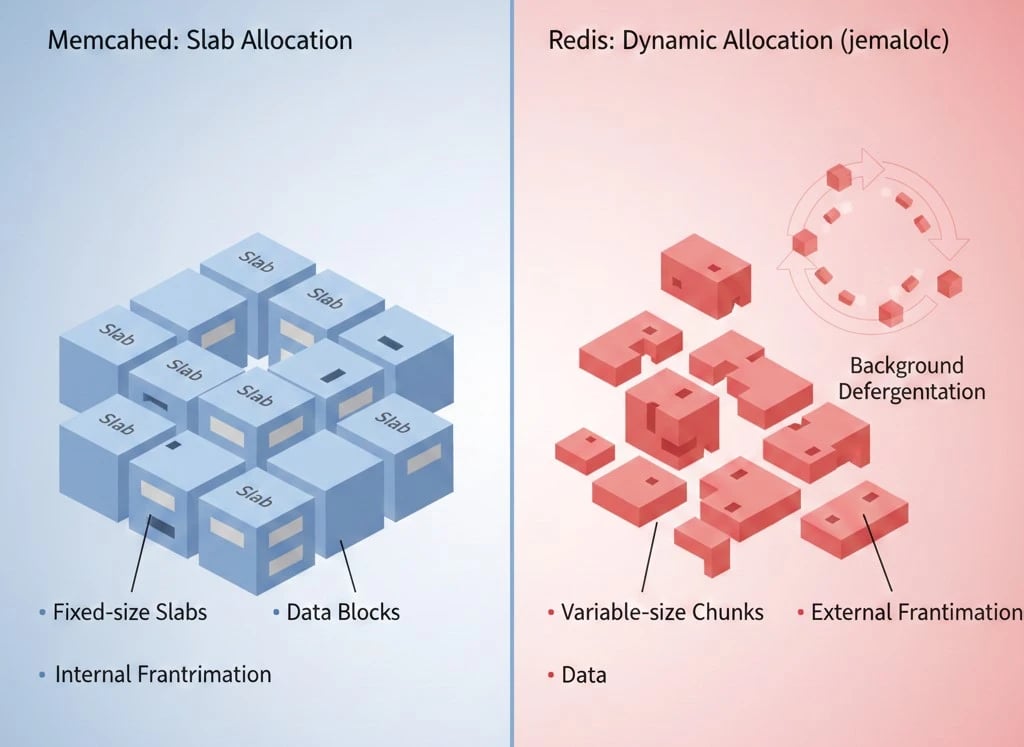

Memcached uses a mechanism called Slab Allocation. With this approach, memory is divided into chunks of predefined sizes, and each item is stored in the smallest chunk size that can hold it. This prevents RAM fragmentation, but the unused remainder of each chunk is wasted.
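The sketch below models how slab classes are laid out and how much space the rounding costs. It is a simplification that assumes typical Memcached defaults: a 96-byte smallest chunk, a growth factor of 1.25 (set with the -f option), chunk sizes rounded up to 8 bytes, and a 1 MB maximum item size. Real servers also account for per-item headers and slab page sizes, so treat the numbers as illustrative.

```python
import bisect

CHUNK_ALIGN_BYTES = 8  # chunk sizes are rounded up to a multiple of 8 bytes


def slab_classes(smallest: int = 96, factor: float = 1.25,
                 largest: int = 1024 * 1024) -> list[int]:
    """Chunk size of each slab class, growing geometrically by `factor`."""
    sizes = []
    size = smallest
    while size < largest:
        sizes.append(size)
        size = int(size * factor)
        if size % CHUNK_ALIGN_BYTES:
            size += CHUNK_ALIGN_BYTES - size % CHUNK_ALIGN_BYTES
    sizes.append(largest)  # final class holds the biggest allowed items
    return sizes


def chunk_for(item_size: int, classes: list[int]) -> int:
    """Smallest chunk that can hold an item of `item_size` bytes (header + key + value)."""
    i = bisect.bisect_left(classes, item_size)
    if i == len(classes):
        raise ValueError("item is larger than the biggest slab class")
    return classes[i]


classes = slab_classes()
for item_size in (90, 100, 121, 1000):
    chunk = chunk_for(item_size, classes)
    print(f"{item_size:>5} B item -> {chunk:>5} B chunk ({chunk - item_size} B unused)")
```

A smaller growth factor creates more classes with tighter fits (less waste per item) at the cost of spreading memory across more slab classes.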

Redis, however, uses dynamic memory allocation (through an allocator such as jemalloc). Each value gets an allocation closely matched to its size, which is more space-efficient at first, but over time frequent insertions and deletions can fragment memory. Redis mitigates this with active defragmentation (the activedefrag setting), a background process that relocates data to reclaim fragmented memory while the server keeps running.

Eviction Policies: Smart Memory Management

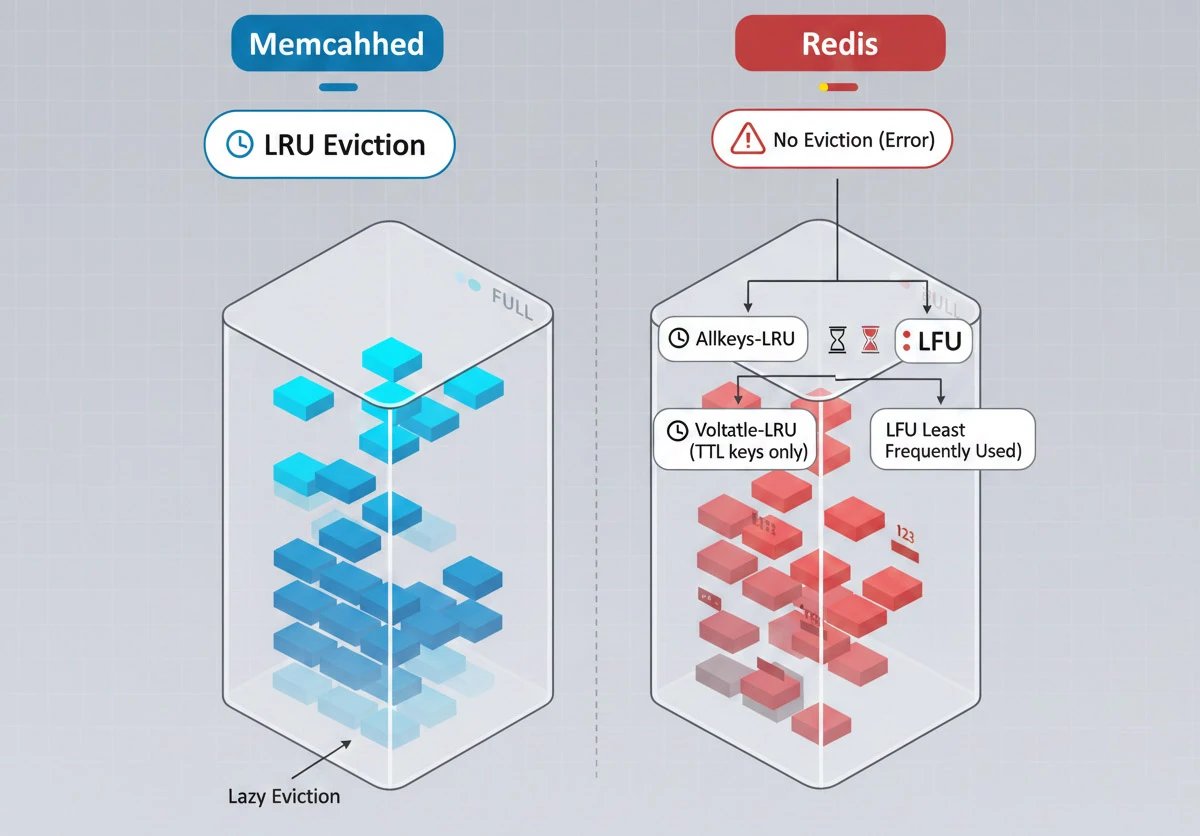

RAM is expensive and always limited. When memory is full, these systems need to decide what to remove to make room for new data. Both support the LRU (Least Recently Used) algorithm.

However, Redis gives you far more flexibility. You can choose from several eviction policies, including:

- noeviction: Return an error on writes when memory is full; nothing is removed.
- allkeys-lru: Remove the least recently used keys from the entire dataset.
- volatile-lru: Remove the least recently used keys, but only among keys that have an expiration time (TTL) set.
- allkeys-lfu / volatile-lfu (Least Frequently Used): Remove the least frequently accessed keys, even if they were accessed recently.

Memcached relies primarily on LRU and operates lazily: it avoids eviction until memory is actually needed and defers cleanup to minimize performance impact.
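The difference between the Redis policies listed earlier is easiest to see in a small model. The ToyCache class below is a hypothetical illustration, not Redis code: it counts keys instead of bytes, only records whether a key has a TTL (nothing actually expires), and tracks recency exactly, whereas Redis uses an approximated LRU based on sampling. LFU is left out to keep it short.

```python
from collections import OrderedDict
from typing import Optional


class ToyCache:
    """Exact-LRU toy cache supporting three Redis-style maxmemory policy names."""

    POLICIES = ("noeviction", "allkeys-lru", "volatile-lru")

    def __init__(self, max_keys: int, policy: str = "allkeys-lru") -> None:
        if policy not in self.POLICIES:
            raise ValueError(f"unsupported policy: {policy}")
        self.max_keys = max_keys  # simplification: capacity in keys, not bytes
        self.policy = policy
        self.data: "OrderedDict[str, str]" = OrderedDict()  # least recently used first
        self.keys_with_ttl: set = set()

    def get(self, key: str) -> Optional[str]:
        if key not in self.data:
            return None
        self.data.move_to_end(key)  # reading a key makes it most recently used
        return self.data[key]

    def set(self, key: str, value: str, ttl: Optional[int] = None) -> None:
        if key not in self.data and len(self.data) >= self.max_keys:
            self._evict_one()
        self.data[key] = value
        self.data.move_to_end(key)
        if ttl is None:
            self.keys_with_ttl.discard(key)  # a plain SET clears any previous TTL
        else:
            self.keys_with_ttl.add(key)  # TTL is recorded only; this toy never expires keys

    def _evict_one(self) -> None:
        if self.policy == "noeviction":
            raise MemoryError("memory full: write rejected (noeviction)")
        # Walk from least to most recently used and take the first eligible key.
        victim = next(
            (k for k in self.data
             if self.policy == "allkeys-lru" or k in self.keys_with_ttl),
            None,
        )
        if victim is None:  # volatile-lru with no TTL keys behaves like noeviction
            raise MemoryError("memory full: no key with a TTL to evict")
        del self.data[victim]
        self.keys_with_ttl.discard(victim)


cache = ToyCache(max_keys=3, policy="volatile-lru")
cache.set("session:1", "a", ttl=60)
cache.set("config", "b")             # no TTL, so volatile-lru never evicts it
cache.set("session:2", "c", ttl=60)
cache.get("session:1")               # session:1 is now more recent than session:2
cache.set("session:3", "d", ttl=60)  # full: evicts session:2, the LRU key with a TTL
print(sorted(cache.data))
```

Under allkeys-lru the same sequence would evict config instead, because it is the least recently used key overall; volatile-lru protects keys that were stored without a TTL.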

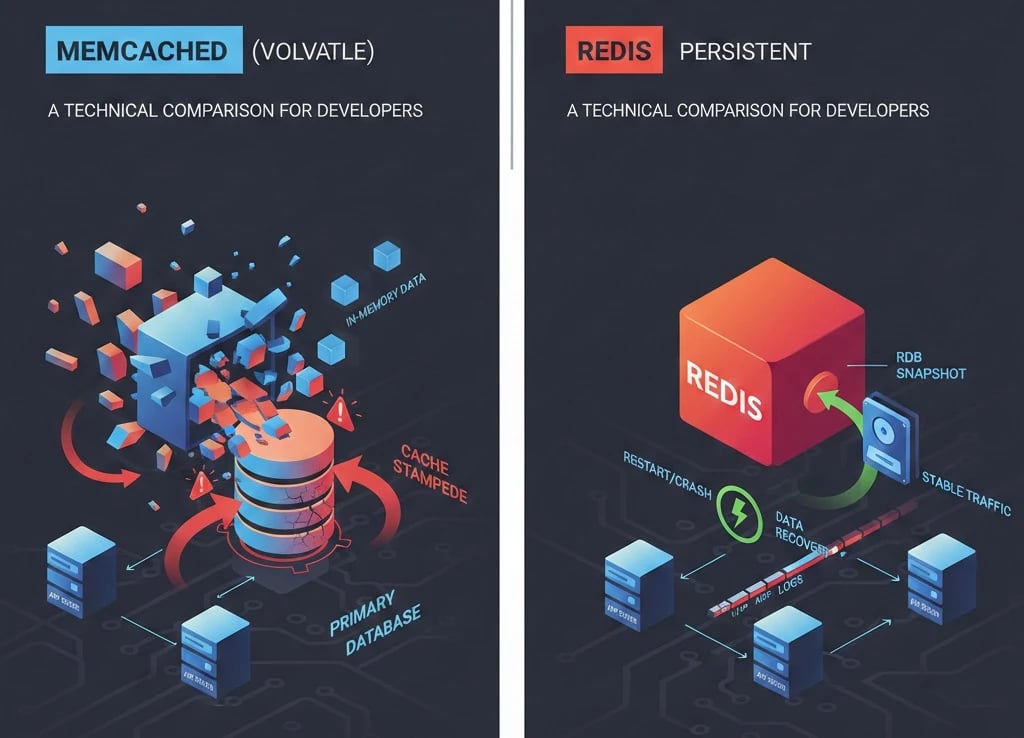

Data Persistence

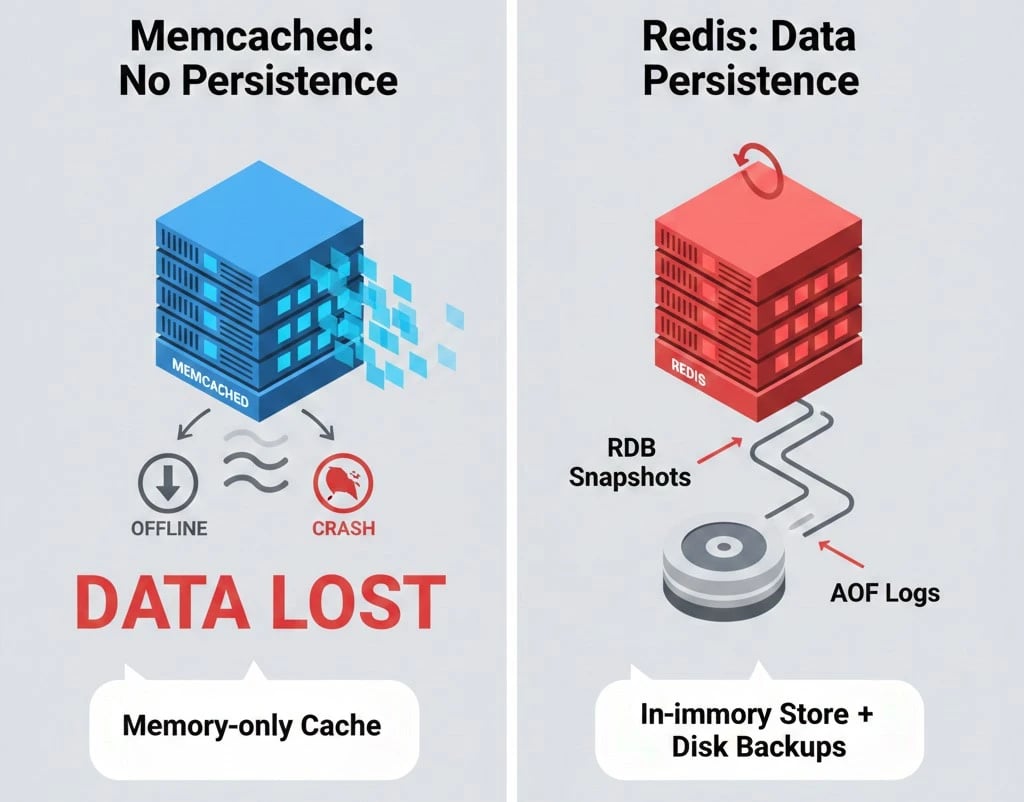

Redis can persist data to disk (for example, by creating snapshots in dump.rdb files or using the AOF mechanism), so data is not lost when the server restarts. Memcached, however, has no disk persistence at all: if the server shuts down or crashes, all in-memory data is lost.

If your project requires data to survive restarts or you want disk-backed safety, Redis is the better choice.

Data Durability and Disaster Recovery

If your cache contains data that is expensive or time-consuming to rebuild from the primary database (such as heavy analytical reports), Redis is the clear winner. Redis offers two persistence mechanisms:

- RDB (Redis Database): Periodic snapshots of the entire dataset at specified intervals.
- AOF (Append Only File): Logs every write command to a file, which is replayed when the server restarts.

Memcached has neither of these. If a Memcached server crashes, your primary database comes under heavy load to repopulate the cache, a problem known as a cache stampede.
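The AOF idea described earlier (log each write, rebuild state by replaying the log) fits in a few lines. The ToyAOFStore class below is a hypothetical illustration, not Redis's implementation or file format: real AOF files hold commands in RESP and are flushed according to the appendfsync setting, whereas this sketch writes JSON lines to an in-memory buffer and supports only SET, INCRBY, and DEL.

```python
import io
import json


class ToyAOFStore:
    """Key-value store that logs every write command and rebuilds itself by replay."""

    def __init__(self, log: io.TextIOBase) -> None:
        self.log = log
        self.data: dict = {}

    def _apply(self, cmd: str, args: list) -> None:
        if cmd == "SET":
            key, value = args
            self.data[key] = value
        elif cmd == "INCRBY":
            key, amount = args
            self.data[key] = str(int(self.data.get(key, "0")) + int(amount))
        elif cmd == "DEL":
            (key,) = args
            self.data.pop(key, None)
        else:
            raise ValueError(f"unknown command: {cmd}")

    def execute(self, cmd: str, *args: str) -> None:
        self._apply(cmd, list(args))
        self.log.write(json.dumps([cmd, *args]) + "\n")  # append the write to the log

    @classmethod
    def recover(cls, log: io.TextIOBase) -> "ToyAOFStore":
        store = cls(log)
        log.seek(0)
        for line in log:  # replay every logged write in its original order
            cmd, *args = json.loads(line)
            store._apply(cmd, args)
        return store


log = io.StringIO()  # stands in for the append-only file on disk
store = ToyAOFStore(log)
store.execute("SET", "cart:7", "3 items")
store.execute("INCRBY", "visits", "5")
store.execute("INCRBY", "visits", "2")
store.execute("DEL", "cart:7")

# Simulate a restart: the in-memory dict is gone, only the log survives.
restored = ToyAOFStore.recover(log)
print(restored.data)
```

Replaying the full history is why AOF files grow over time; Redis periodically rewrites the AOF into a compact set of commands that produces the same dataset.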

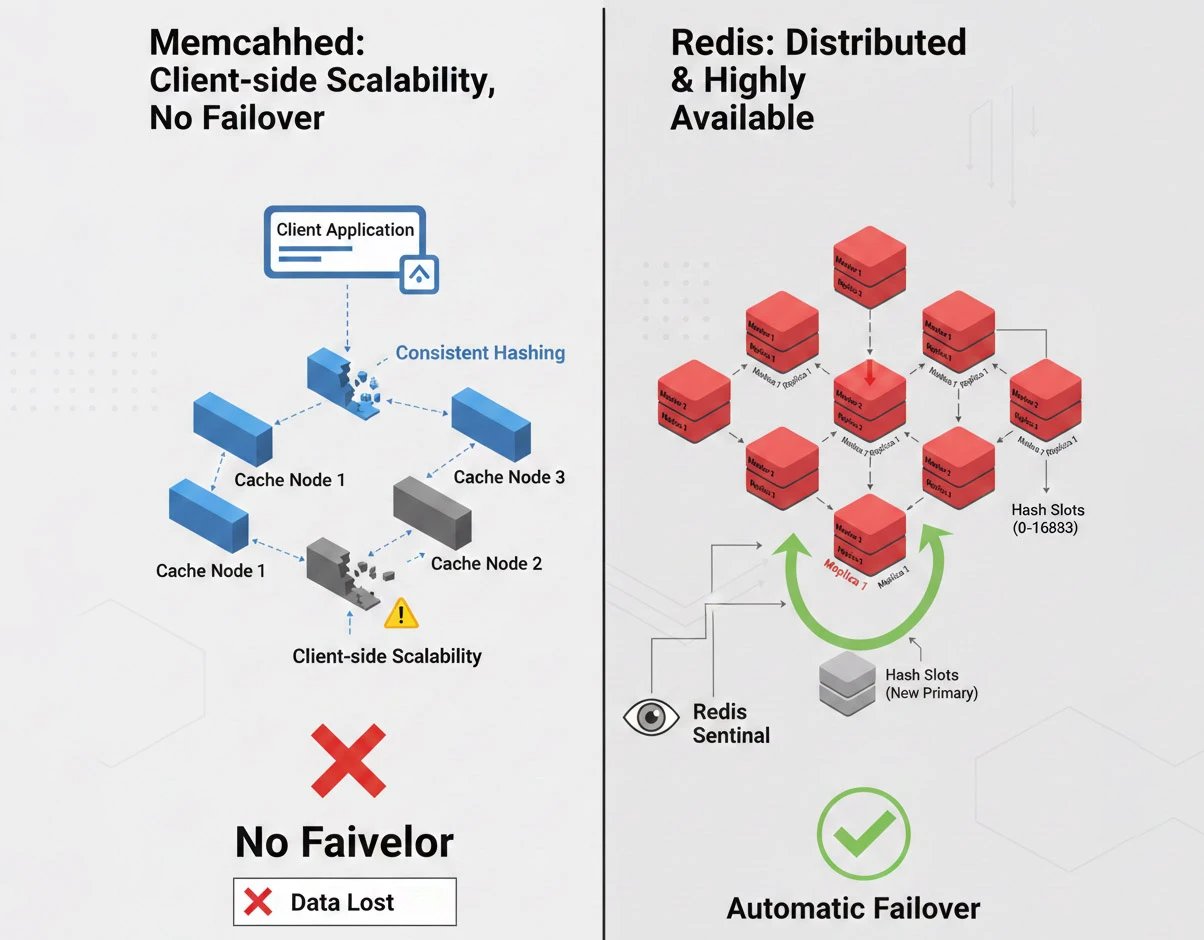

Scalability and High Availability

Redis natively supports clustering through Redis Cluster, which divides data across 16,384 hash slots and distributes them among multiple nodes; if a primary node fails, the cluster automatically promotes one of its replicas. For non-clustered deployments, Redis Sentinel provides a similar safety net: it monitors your servers and automatically promotes a replica to master when the primary fails (failover).
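Slot assignment follows a fixed rule from the Redis Cluster specification: the slot is CRC16 (XMODEM variant) of the key modulo 16,384, and if the key contains a non-empty {...} hash tag, only the text inside the first such tag is hashed, so related keys can be kept on the same node. A pure-Python sketch of that rule, handy for predicting what CLUSTER KEYSLOT would report, looks like this; the example key names are hypothetical.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (the XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Map a key to one of the 16,384 Redis Cluster hash slots."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # only a non-empty {...} counts as a hash tag
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


for key in ("user:1000:profile", "{user:1000}:profile", "{user:1000}:cart"):
    print(key, key_hash_slot(key))
```

Because both tagged keys hash only "user:1000", they share a slot, which is what allows multi-key commands and transactions on them inside a cluster.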

Memcached, by contrast, relies on the client for horizontal scalability. You provide the client with a list of servers, and it distributes data among them, typically using consistent hashing. If a Memcached server goes down, the keys it held simply become cache misses; there is no replication, automatic failover, or replacement.
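Client-side consistent hashing can be sketched as a hash ring: each server is placed at many points on the ring, and a key belongs to the first server point at or after the key's own hash. The HashRing class below is a hypothetical illustration in the spirit of the ketama scheme used by many Memcached clients; real client libraries differ in hash function and number of points, and the server addresses are made up.

```python
import bisect
import hashlib


def ring_position(value: str) -> int:
    """Position on the ring: the first 8 bytes of an MD5 digest as an integer."""
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes (many points per server)."""

    def __init__(self, servers: list, points_per_server: int = 100) -> None:
        self.points_per_server = points_per_server
        self._points: list = []  # sorted (position, server) pairs
        for server in servers:
            self.add(server)

    def add(self, server: str) -> None:
        for i in range(self.points_per_server):
            bisect.insort(self._points, (ring_position(f"{server}#{i}"), server))

    def remove(self, server: str) -> None:
        self._points = [p for p in self._points if p[1] != server]

    def server_for(self, key: str) -> str:
        if not self._points:
            raise LookupError("no servers on the ring")
        # First point at or after the key's position, wrapping past the end.
        i = bisect.bisect_left(self._points, (ring_position(key), ""))
        return self._points[i % len(self._points)][1]


servers = ["10.0.0.1:11211", "10.0.0.2:11211", "10.0.0.3:11211"]
ring = HashRing(servers)
keys = [f"page:{n}" for n in range(1000)]
before = {k: ring.server_for(k) for k in keys}

ring.remove("10.0.0.2:11211")  # that node dies; nothing takes over its data
after = {k: ring.server_for(k) for k in keys}

moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys remapped")
```

Removing a server moves only the keys that lived on it, and they start out as cache misses on their new servers; every other key stays where it was, which is the main advantage over a simple hash-modulo-server-count scheme.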

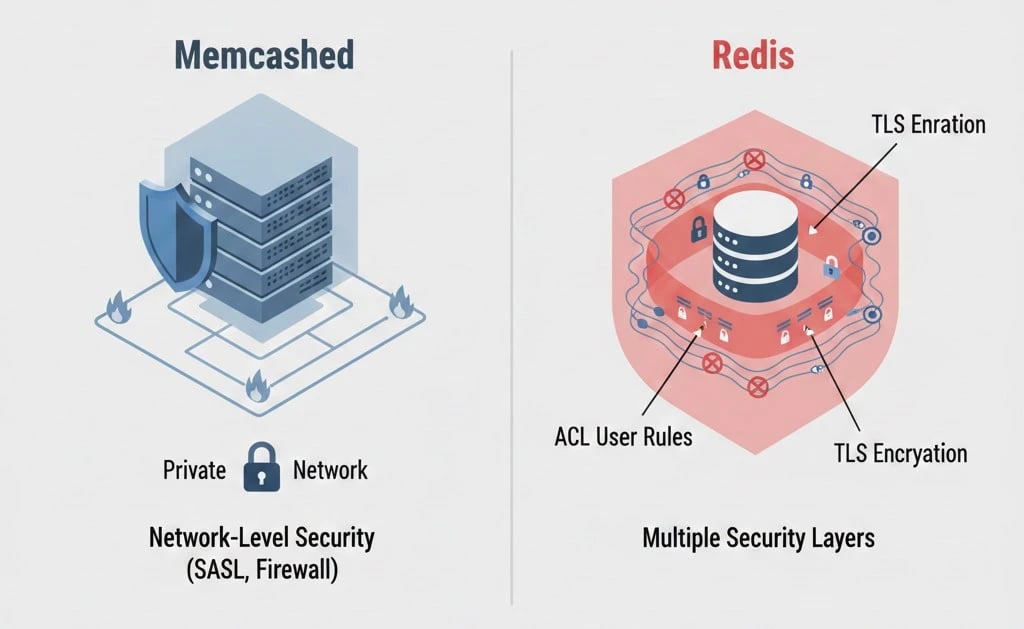

Security

Never expose Redis or Memcached directly to the public internet without a protective layer. These tools are designed for speed, not security.

Memcached uses very simple protocols and relies on basic authentication mechanisms like SASL, which can be cumbersome to configure. As a result, Memcached security is typically enforced at the network or firewall level.

Redis is in a somewhat better position. Since version 6.0, Redis supports ACLs (Access Control Lists), allowing you to precisely define which users can execute which commands, and native TLS support encrypts your data while it travels over the network.

To explore how Redis compares with document databases for structured data and persistence, check out our Redis vs MongoDB post.

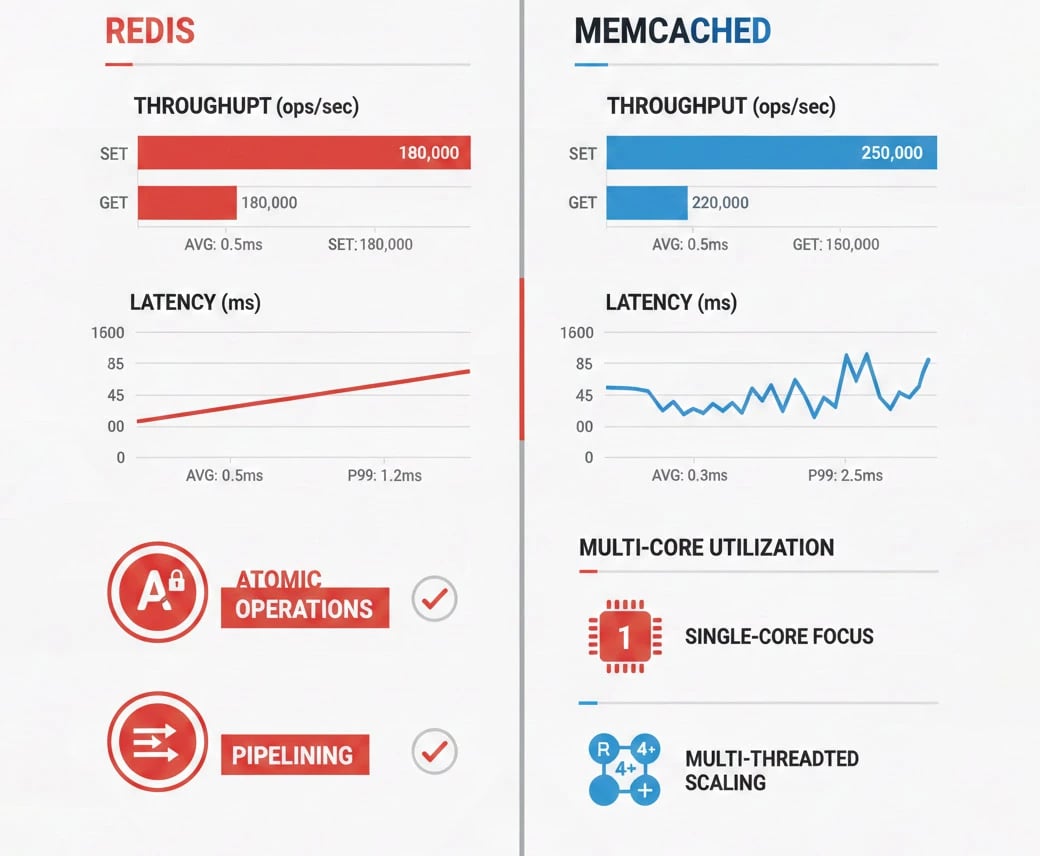

Memcached vs Redis: Performance and Benchmarks

Let’s be honest: both systems are so fast that in most projects they are unlikely to be your primary bottleneck, and typical latency for both is well under a millisecond. According to 2025 benchmarks, Memcached can handle around 250,000 read operations per second on a typical server, while Redis manages about 180,000 operations per second under normal conditions. Exact figures vary with hardware, client library, and configuration, so treat these as ballpark numbers. The key point, though, is pipelining: when Redis receives commands in batches (for example, 10 commands per round trip), it can reach around 800,000 operations per second, surpassing Memcached.
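The pipelining gain follows from simple arithmetic: without pipelining, every command pays a full network round trip, while a pipeline pays one round trip per batch. The model below is a back-of-the-envelope sketch for a single client connection; the round-trip and per-command times are hypothetical placeholders, not figures from the benchmarks quoted in this post, and the model ignores CPU saturation and multiple concurrent clients.

```python
def ops_per_second(rtt_ms: float, service_ms: float, batch: int = 1) -> float:
    """Throughput of one connection that sends `batch` commands per round trip.

    Each batch costs one network round trip plus the server time for every
    command in it, so pipelining amortizes the round trip across the batch.
    """
    if batch < 1:
        raise ValueError("batch must be at least 1")
    batch_time_ms = rtt_ms + batch * service_ms
    return batch / batch_time_ms * 1000.0


RTT_MS = 0.2        # hypothetical client-server round trip
SERVICE_MS = 0.005  # hypothetical server time per simple command
for batch in (1, 10, 100):
    print(f"batch={batch:>3}: ~{ops_per_second(RTT_MS, SERVICE_MS, batch):,.0f} ops/sec")
```

As the batch grows, throughput approaches the ceiling of 1 / service_ms set by the server itself, which is why pipelining helps most when network latency dominates the cost of each command.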

| Performance Metric | Redis | Memcached | Technical Explanation |
|---|---|---|---|
| Throughput (SET) | ~100,000 ops/sec | ~180,000–220,000 ops/sec | Memcached is faster due to a simpler protocol and no data structure processing |
| Throughput (GET) | ~120,000–150,000 ops/sec | ~200,000–250,000 ops/sec | Memcached has an edge for simple GETs |
| Average Latency | ~0.2–0.4 ms | ~0.1–0.3 ms | Difference is minimal and usually imperceptible to end users |
| High-load Latency (P99) | More stable | Less stable | Redis behaves more predictably under high pressure |
| Atomic Operations | Very fast (native) | Limited (incr/decr and CAS only) | Redis offers atomic commands on rich data types without external locks |
| Pipeline / Batch Ops | Excellent | Limited | Redis is highly optimized for batch operations |
| Multi-core Utilization | Moderate (single-threaded command execution) | Excellent (multi-threaded) | Memcached can leverage multiple cores simultaneously |

Raw benchmarks often show Memcached as faster, because it only performs simple key–value operations without extra processing. However, in real-world applications, Redis offers more stable and reliable performance, especially when handling concurrent operations or complex data types. In the end, speed differences are rarely decisive; the right choice mostly depends on your use case and system architecture needs.

For a deeper look at how Redis compares with streaming and messaging systems in real-world performance, read our Redis vs Kafka blog post.

When to Use Redis and When to Use Memcached

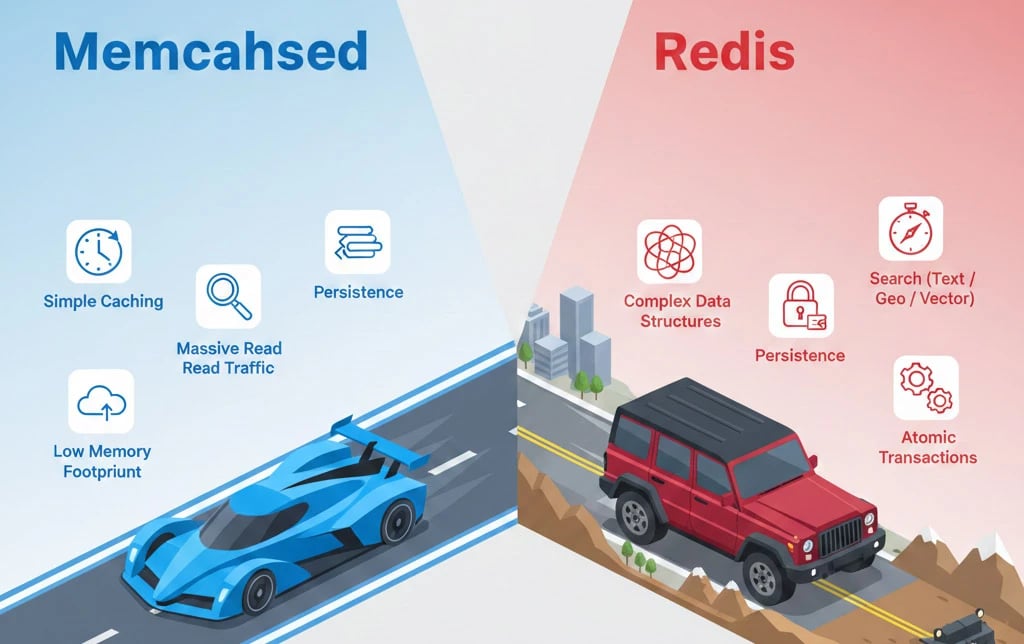

Choosing between these two tools is like choosing between a race car (Memcached) and a luxury, powerful SUV (Redis). Both are fast, but they are built for different roads.

Scenarios Where You Should Choose Redis

If your project has any of the following characteristics, Redis is undoubtedly the way to go:

- Complex data structures: You need queues, leaderboards, sets without duplicates, or multi-field hashes.
- Critical data persistence: Your cache cannot be rebuilt quickly from the main database, or some data (like shopping carts) must never be lost.
- Real-time capabilities: You want to implement chat systems, notifications, or streaming services.
- Advanced search: You need full-text search, geospatial search, or vector search for AI applications.
- Transactions and atomicity: Multiple operations must execute in an “all-or-nothing” manner.

In general, Redis offers more features but is more complex to maintain.

Scenarios Where Memcached Is a Better Choice

Memcached shines when you are looking for ultimate simplicity:

- Simple object caching: You only want to cache HTML pages or API responses, without any server-side processing.
- Massive read traffic: Your website receives millions of simple read requests per second, and you want to fully utilize your server’s CPU cores.
- Memory constraints: RAM is very limited and even a 20% saving matters.
- Legacy infrastructure: Your team has used Memcached for years and doesn’t need Redis’s advanced features.

Note: Many large systems use both simultaneously. For example, Memcached might be used for caching static pages and user sessions, while Redis handles more specialized tasks like message queues or advanced data features. Depending on your project’s needs and the balance between complexity and performance, a hybrid approach can give you the best of both worlds.

Check out our detailed Redis vs Hazelcast comparison to see how Redis stacks up against other in-memory solutions.

Final Conclusion: Which Way Should You Go?

For most of today’s projects, Redis is the smarter choice. Its diverse data structures, stronger security, built-in vector search for AI workloads, and support for persistent storage make Redis much more than just a cache.

However, if your system needs massive scale for simple caching (hundreds of gigabytes of plain key–value data), you want minimal management complexity, and you only deal with strings, Memcached remains a classic, stable, and ultra-fast option.

Remember, in 2026, we no longer live in an “either-or” world. Many large systems use hybrid architectures: Memcached for the first caching layer (extremely fast and volatile) and Redis for the second layer, managing structured data.
