In modern computing, two approaches dominate how applications are deployed and managed: traditional virtualization and containerization. Both have reshaped IT infrastructure. But what is containerization, and how does it differ from virtualization? Let's compare hardware virtualization with containerization, look at the key differences between them, and consider where each one fits.

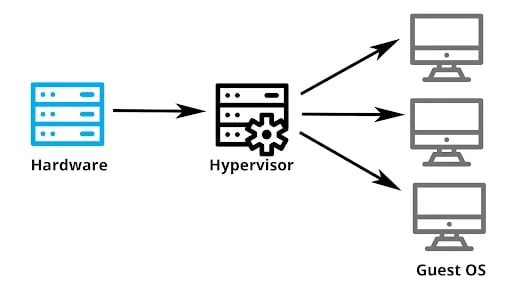

Traditional virtualization, a stalwart of the industry, uses a hypervisor to present virtual hardware, enabling multiple operating systems to coexist on a single physical machine. Containerization takes a more lightweight approach: it packages an application and its dependencies into isolated environments known as containers, which share the host's kernel. This fundamental difference between virtualization and containerization drives most of the trade-offs between the two.

In the realm of hardware virtualization vs containerization, each approach boasts its own set of pros and cons. Traditional virtualization excels in providing robust isolation and compatibility across diverse environments. However, its resource-intensive nature can lead to overhead and inefficiencies. Conversely, containerization thrives on agility and resource efficiency, allowing for rapid deployment and scaling. Yet, it may sacrifice some degree of isolation compared to its virtualization counterpart.

When it comes to virtualization vs containerization in cloud computing, both paradigms find their place in the sun. Virtualization paved the way for cloud computing's inception, offering the flexibility and abstraction needed to virtualize entire infrastructure stacks. However, containerization, spearheaded by technologies like Docker, has surged in popularity, offering unparalleled speed and portability for cloud-native applications.

In DevOps, both virtualization and containerization are valuable tools for modern software development and deployment pipelines. Virtualization streamlines the provisioning of entire virtual machines, while containerization, most commonly with Docker containers, provides a lightweight alternative that facilitates the rapid deployment of microservices and applications.

As we navigate the labyrinth of virtualization vs containerization, one thing becomes abundantly clear: each has its own strengths and weaknesses. Whether you opt for the robust isolation of traditional virtualization or the nimble efficiency of containerization, the choice ultimately hinges on the unique requirements of your infrastructure and applications.

In conclusion, the choice between virtualization and containerization continues to shape modern computing. From their respective pros and cons to their roles in cloud computing and DevOps, the comparison keeps driving innovation in how infrastructure is built and run.

What is a Container?

In modern computing, the container has changed the way we deploy and manage applications. But what exactly is a container, and how does it differ from a virtual machine?

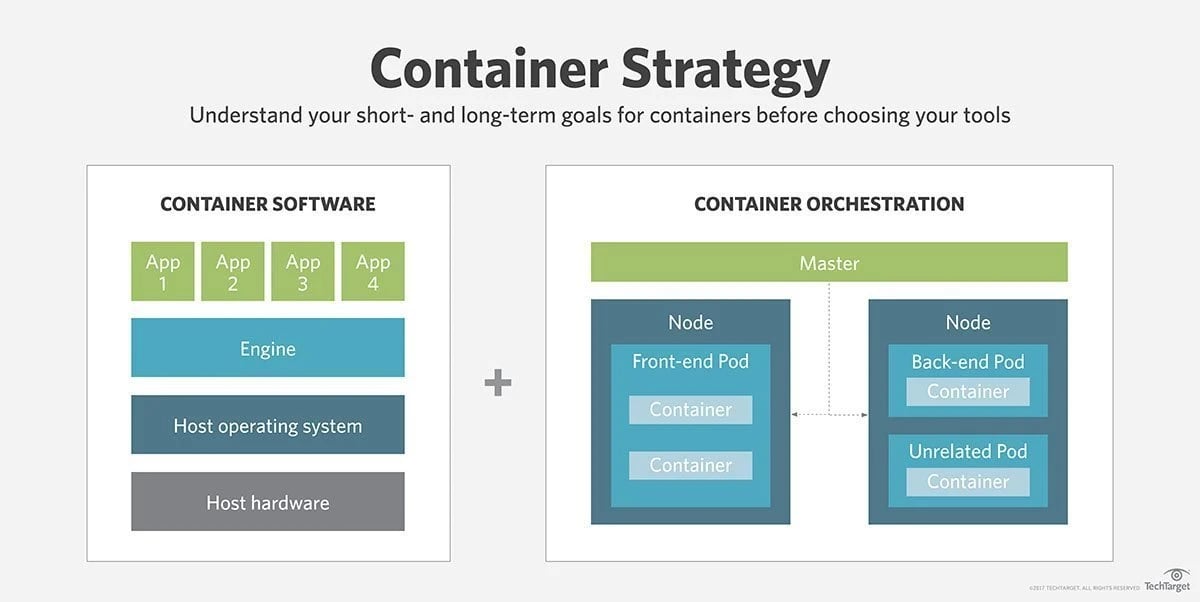

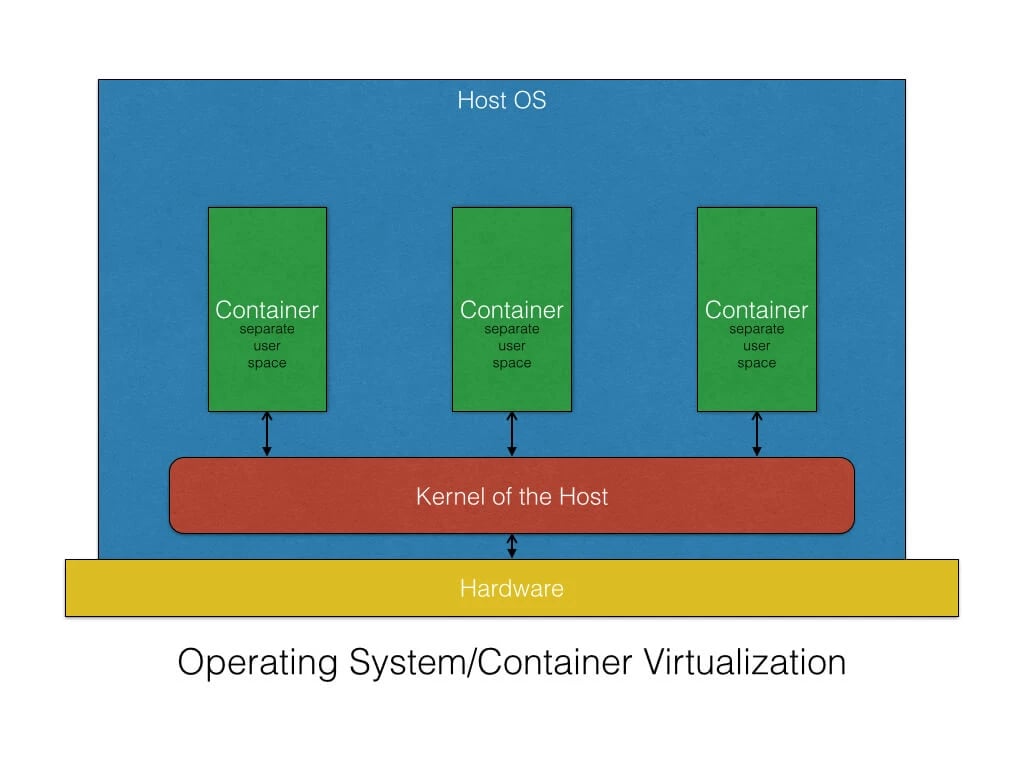

At its core, a container is a lightweight, portable, and self-sufficient unit that encapsulates an application along with its dependencies, configurations, and runtime environment. Unlike traditional virtualization, which relies on hypervisors to emulate hardware, containers leverage a shared operating system kernel, enabling them to operate with minimal overhead and resource utilization.

The difference between virtualization and containerization becomes apparent when examining their underlying principles. While traditional virtualization abstracts physical hardware to create isolated virtual machines, containerization abstracts the operating system to create isolated environments for applications. Because a container does not boot its own operating system, containers generally use resources more efficiently and deploy faster than virtual machines.

Enter Docker, the poster child of containerization. Docker has democratized the adoption of container technology, providing developers with a user-friendly platform to build, ship, and run applications within containers. With Docker, the complexities of traditional virtualization are streamlined, allowing for seamless integration into development workflows and production environments.

In the realm of cloud computing, virtualization vs containerization takes center stage once again. While virtualization paved the way for cloud infrastructure by abstracting physical hardware, containerization adds another layer of abstraction, enabling even greater agility and scalability. Virtualization may excel in providing complete isolation and compatibility, but containerization shines in its ability to rapidly provision and scale applications, making it the preferred choice for cloud-native architectures.

As with any technology, virtualization vs containerization comes with its own set of pros and cons. Virtualization offers robust isolation and security, making it suitable for legacy applications and environments with strict compliance requirements. However, its resource-intensive nature and slower deployment times may pose challenges in highly dynamic environments. On the other hand, containerization boasts unparalleled speed and efficiency, but may sacrifice some degree of isolation and security compared to virtualization.

When it comes to containerization vs application virtualization, the distinction lies in their scope and approach. While application virtualization focuses on encapsulating individual applications for portability and compatibility, containerization provides a more comprehensive solution, encompassing the entire runtime environment along with the application.

In the realm of devops, virtualization and containerization play vital roles in accelerating the software development lifecycle. By using Docker and container orchestration platforms like Kubernetes, devops teams can automate the deployment, scaling, and management of containerized applications with ease, fostering collaboration and innovation across the organization.

In summary, containers represent a paradigm shift in the world of computing, offering a more agile, efficient, and scalable alternative to traditional virtualization. Whether you're exploring virtualization vs containerization in cloud computing or harnessing the power of Docker for devops, containers continue to redefine the boundaries of what's possible in the digital age.

What Is Containerization?

Containerization has reshaped how we package, deploy, and manage applications. But what exactly is containerization, and how does it differ from the traditional approach of virtualization?

At its essence, containerization is a methodology that encapsulates applications and their dependencies within self-contained units known as containers. Unlike traditional virtualization, which relies on hypervisors to emulate hardware and create isolated virtual machines, containerization operates at the operating system level, leveraging shared resources and providing lightweight, portable environments for applications to run.

The difference between virtualization and containerization becomes evident when considering their underlying architectures. While virtualization abstracts physical hardware to create multiple virtual machines, each with its own operating system, containerization abstracts the operating system itself, enabling multiple containers to share the same kernel while remaining isolated from one another.
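One way to see the shared kernel in practice is to compare the kernel release reported inside a container with the one reported on its Linux host: they should match, whereas a VM reports its own guest kernel. The sketch below is a minimal illustration using only the Python standard library. The container check is a rough heuristic (an assumption, not an official API): it looks for the `/.dockerenv` marker file and for well-known runtime names in `/proc/1/cgroup`, and it can miss containers on hosts using cgroup v2.

```python
import platform
from pathlib import Path


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on."""
    return platform.release()


def looks_like_container() -> bool:
    """Heuristic guess at whether this process runs inside a container.

    Assumption: Docker usually creates /.dockerenv, and cgroup v1 paths
    often mention the runtime. Neither signal is guaranteed.
    """
    if Path("/.dockerenv").exists():
        return True
    try:
        cgroup_text = Path("/proc/1/cgroup").read_text()
    except OSError:
        # Not Linux, or /proc is unavailable: we cannot tell.
        return False
    markers = ("docker", "kubepods", "containerd", "lxc")
    return any(marker in cgroup_text for marker in markers)


if __name__ == "__main__":
    print("kernel release:", kernel_release())
    print("looks like a container:", looks_like_container())
```

Running the script once on a Linux host and once inside a container on that host should print the same kernel release both times, which is the shared kernel at work.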

Enter Docker, the game-changer in the realm of containerization. Docker has revolutionized the way developers build, ship, and run applications by providing a user-friendly platform for creating and managing containers. With Docker, the complexities of traditional virtualization are minimized, allowing for rapid deployment and scalability of applications in diverse environments.

When exploring the differences between virtualization and containerization, it's crucial to consider their respective impacts on cloud computing. While virtualization laid the foundation for cloud infrastructure by abstracting physical hardware and enabling resource pooling, containerization takes it a step further, providing even greater agility and efficiency in cloud-native environments.

In the realm of cloud computing, containerization vs virtualization becomes a topic of debate. While virtualization excels in providing complete isolation and compatibility, containerization offers faster deployment times and greater resource efficiency, making it the preferred choice for modern cloud architectures.

In DevOps, virtualization and containerization both play integral roles in streamlining the software development lifecycle. By using tools like Docker and container orchestration platforms such as Kubernetes, DevOps teams can automate the deployment, scaling, and management of containerized applications, promoting collaboration and agility within organizations.

In summary, containerization represents a paradigm shift in the world of computing, offering a more agile, efficient, and scalable alternative to traditional virtualization. Whether you're comparing containerization and virtualization in cloud computing or using Docker in a DevOps workflow, containers continue to redefine the landscape of IT infrastructure and application deployment.

How Does Containerization Work?

At its core, containerization is a methodology that enables the packaging and deployment of applications along with their dependencies into self-contained units called containers. But how does it work, and what sets it apart from traditional virtualization, especially in the context of cloud computing?

First, let's distinguish between virtualization and containerization in cloud computing. Virtualization, a tried-and-tested approach, involves emulating hardware to create multiple virtual machines (VMs) on a single physical server. Each VM runs its own operating system, allowing for the isolation of applications and resources. Containerization, on the other hand, operates at the operating system level, leveraging shared resources and enabling multiple containers to run on a single host OS. This fundamental difference makes containerization more lightweight, efficient, and scalable compared to traditional virtualization.

Now, let's delve into how containerization works in cloud computing, where scalability, flexibility, and resource efficiency are paramount. In a cloud environment, virtualization and containerization technologies work hand in hand to optimize resource utilization and streamline application deployment.

Virtualization in cloud computing lays the groundwork by abstracting physical hardware and enabling the creation of virtualized infrastructure, including compute, storage, and networking resources. This allows for the efficient allocation of resources to VMs, ensuring workload isolation and flexibility.

Containerization in cloud computing takes efficiency to the next level by abstracting the operating system and packaging applications into lightweight, portable containers. These containers contain everything needed to run the application, including code, runtime, system libraries, and dependencies. With Docker, developers can build, ship, and run applications consistently across different environments, from development laptops to production servers in the cloud.
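To make the packaging step concrete, here is a minimal sketch that renders the text of a Dockerfile, the build recipe Docker reads to produce an image. The helper name `render_dockerfile`, the base image tag, and the install and start commands are illustrative assumptions, not values from any particular project; only the Dockerfile instructions themselves (`FROM`, `WORKDIR`, `COPY`, `RUN`, `CMD`) are standard.

```python
import json


def render_dockerfile(base_image: str, workdir: str,
                      install_cmd: str, start_cmd: list[str]) -> str:
    """Render a minimal Dockerfile as text (hypothetical helper)."""
    lines = [
        f"FROM {base_image}",             # runtime and system libraries
        f"WORKDIR {workdir}",             # directory used by later steps
        "COPY . .",                       # application code
        f"RUN {install_cmd}",             # dependencies, installed at build time
        f"CMD {json.dumps(start_cmd)}",   # exec-form start command (JSON array)
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile(
        base_image="python:3.12-slim",    # example tag, an assumption
        workdir="/app",
        install_cmd="pip install -r requirements.txt",
        start_cmd=["python", "app.py"],
    ))
```

Each line maps onto one of the things the container carries: the base image supplies the runtime and system libraries, `COPY` adds the code, and `RUN` installs the dependencies.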

The beauty of containerization in cloud computing lies in its agility and scalability. Containers can be quickly deployed, scaled, and managed using container orchestration platforms like Kubernetes, which automates tasks such as deployment, scaling, and load balancing. This enables organizations to adapt to changing demands and deliver applications faster, all while optimizing resource usage.
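As an illustration of automated scaling, the Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as: desired replicas = ceil(current replicas × current metric / target metric). The sketch below implements only that ratio; the function name is hypothetical, and the real autoscaler also applies a tolerance band, stabilization windows, and minimum and maximum replica bounds, all of which are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float) -> int:
    """Core scaling ratio only; tolerance and min/max bounds are omitted."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * (current_metric / target_metric))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 4 replicas averaging 30% CPU against a 60% target: scale in.
    print(desired_replicas(4, 30, 60))
```

Because containers start in seconds, acting on a rule like this is practical even when demand changes quickly.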

In summary, containerization in cloud computing offers a modern approach to application deployment and management, complementing traditional virtualization technologies. By leveraging Docker and container orchestration platforms, organizations can unlock the full potential of cloud computing, driving agility, scalability, and innovation in the digital era.

Container virtualization example

Let's explore a captivating example of container virtualization and how it distinguishes itself from traditional virtualization:

Imagine you're a software developer tasked with deploying a complex web application that consists of multiple microservices. Traditionally, you might opt for virtualization to isolate each component of the application within its own virtual machine (VM). However, this approach can be resource-intensive and cumbersome to manage.

Enter containerization, a revolutionary alternative to traditional virtualization. With container virtualization, you can encapsulate each microservice and its dependencies into lightweight, portable containers. These containers share the host operating system's kernel, resulting in a more efficient use of resources compared to virtual machines.

Let's delve deeper into this example:

Efficiency

In traditional virtualization, each VM requires its own operating system, leading to overhead and resource wastage. In contrast, container virtualization leverages a shared kernel, enabling multiple containers to run on the same host without the need for additional OS instances. This efficiency makes containerization an attractive option for deploying microservices-based applications, where resource optimization is crucial.

Isolation

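Virtualization provides strong isolation because each VM runs its own kernel on virtualized hardware. Containers isolate workloads at the process level, using kernel features such as namespaces and cgroups on Linux. That costs less overhead, but it is a weaker boundary because every container shares the host kernel. In practice each container still operates independently, so a change or crash in one containerized application does not normally affect the others. This isolation is essential for maintaining the reliability and security of the application.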

Portability

Containers are inherently portable, allowing you to develop and deploy applications consistently across different environments, from development to production. With containerization, you can package the application along with its dependencies into a standardized format, making it easy to move between on-premises infrastructure and cloud environments.

Speed

One of the key advantages of containerization is its speed. Containers can be spun up and torn down in seconds, enabling rapid deployment and scaling of applications. This agility is particularly beneficial in dynamic environments where workloads fluctuate frequently.

Resource Utilization

Virtualization and containerization differ in terms of resource utilization. Virtual machines consume more resources due to the overhead of running multiple operating systems, whereas containers share resources more efficiently. This makes containerization a cost-effective solution for maximizing resource utilization in cloud environments.

Ecosystem

The containerization ecosystem, led by platforms like Docker and Kubernetes, provides a rich set of tools and services for building, deploying, and managing containerized applications. These tools streamline the container lifecycle, from development to production, and empower teams to embrace modern DevOps practices.

In conclusion, container virtualization offers a compelling alternative to traditional virtualization, with its focus on efficiency, isolation, portability, speed, resource utilization, and a vibrant ecosystem. By adopting containerization, organizations can unlock new possibilities in application deployment and management, paving the way for innovation and agility in the digital era.

Benefits and Disadvantages of Containerization

Let's explore the benefits and disadvantages of containerization, comparing them to traditional virtualization:

Benefits of Containerization:

Efficiency

Containerization offers superior resource utilization compared to traditional virtualization. By sharing the host operating system's kernel, containers have minimal overhead, allowing for higher density and better efficiency in resource usage.
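A rough back-of-the-envelope calculation shows where the density gain comes from. In the sketch below every number is an illustrative assumption, not a measurement: a host with 64 GiB of memory, an application that needs 512 MiB, about 1 GiB of extra memory per VM for the guest operating system, and about 16 MiB of extra memory per container.

```python
def instances_per_host(host_mem_mib: int,
                       app_mem_mib: int,
                       overhead_mib: int) -> int:
    """How many instances fit in memory, given a fixed per-instance overhead."""
    return host_mem_mib // (app_mem_mib + overhead_mib)


if __name__ == "__main__":
    host = 64 * 1024          # 64 GiB host (assumed)
    app = 512                 # memory the application itself needs (assumed)
    vms = instances_per_host(host, app, overhead_mib=1024)       # guest OS per VM
    containers = instances_per_host(host, app, overhead_mib=16)  # shared kernel
    print(f"VMs: {vms}, containers: {containers}")
```

With these assumed figures the same host fits 42 VMs or 124 containers. Real ratios depend heavily on the workload, so treat this as a way to reason about overhead rather than a benchmark.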

Portability

Containers are lightweight and portable, making them easy to move between different environments, from development to production. This portability enables developers to build applications once and run them anywhere, streamlining the deployment process and reducing time-to-market.

Isolation

Virtualization provides isolation through separate virtual machines, each with its own kernel. Containerization isolates applications at the process level with less overhead, although the boundary is weaker because containers share the host kernel. Each container still operates independently, so a change or crash in one container does not normally affect others, which helps the reliability of applications.

Speed

Containers can be spun up and torn down in seconds, enabling rapid deployment and scaling of applications. This agility is particularly advantageous in dynamic environments where workloads fluctuate frequently, allowing for faster response times to changing demands.

DevOps Integration

Containerization aligns well with modern DevOps practices, facilitating collaboration between development and operations teams. Containers encapsulate the application and its dependencies, making it easier to build, test, and deploy software in a consistent and automated manner.

Ecosystem

The containerization ecosystem, led by platforms like Docker and Kubernetes, offers a rich set of tools and services for building, deploying, and managing containerized applications. These tools streamline the container lifecycle and empower organizations to embrace modern software development practices.

Disadvantages of Containerization:

Complex Networking

Container networking can be more complex compared to virtual machine networking, especially in multi-container or microservices architectures. Managing network communication between containers and external services requires careful planning and configuration.

Security Concerns

While containers provide isolation, they share the same kernel, which may introduce security vulnerabilities. Ensuring the security of containerized environments requires implementing proper access controls, vulnerability scanning, and security policies.
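As one example of tightening access controls, the sketch below assembles a `docker run` command line that applies a few commonly used hardening flags: a read-only root filesystem, all Linux capabilities dropped, privilege escalation blocked, and a non-root user. The helper name, the image name, and the user and group IDs are hypothetical. The function only builds the argument list and does not execute anything, and whether an application tolerates these restrictions has to be tested case by case.

```python
def hardened_run_args(image: str, uid: int = 1000, gid: int = 1000) -> list[str]:
    """Build (but do not execute) a docker run command with hardening flags."""
    return [
        "docker", "run", "--rm",
        "--read-only",                            # root filesystem mounted read-only
        "--cap-drop", "ALL",                      # drop every Linux capability
        "--security-opt", "no-new-privileges",    # block setuid-style escalation
        "--user", f"{uid}:{gid}",                 # run as a non-root user
        image,
    ]


if __name__ == "__main__":
    # "example/web:1.0" is a placeholder image name.
    print(" ".join(hardened_run_args("example/web:1.0")))
```

Flags like these reduce what a compromised process can do, but they do not replace vulnerability scanning of images or keeping the host kernel patched.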

Limited Operating System Support

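Containers rely on the host operating system's kernel, so an image built for one kernel family cannot run natively on another. Linux containers need a Linux kernel, for example, and running them on Windows or macOS typically involves a lightweight Linux VM behind the scenes. This can pose challenges when migrating legacy or monolithic applications to containerized environments.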

Persistent Storage

Containers are designed to be ephemeral, meaning they are typically short-lived and stateless. Managing persistent data storage within containers requires additional consideration and may involve external storage solutions or volume mounts.
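A volume mount can be sketched as follows. The helper assembles a `docker run` command that attaches a named volume to a path inside the container, so the data outlives any single container. The helper name, container name, and volume name are hypothetical, and the image and data path are examples only. Nothing is executed; the command is just printed.

```python
import shlex


def run_with_volume(image: str, name: str,
                    volume: str, mount_path: str) -> list[str]:
    """Build (but do not execute) a docker run command with a named volume."""
    return [
        "docker", "run", "-d",
        "--name", name,
        "-v", f"{volume}:{mount_path}",   # named volume : path in the container
        image,
    ]


if __name__ == "__main__":
    args = run_with_volume(
        image="postgres:16",                     # example image
        name="db",                               # hypothetical container name
        volume="pgdata",                         # hypothetical volume name
        mount_path="/var/lib/postgresql/data",
    )
    print(shlex.join(args))
```

If the container is removed and recreated with the same `-v` argument, the new container sees the data the old one wrote, because the volume lives outside the container's writable layer.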

Learning Curve

Adopting containerization requires learning new tools, technologies, and best practices. Organizations may face challenges in upskilling their teams and transitioning from traditional virtualization to container-based architectures.

Resource Overhead

While containers have minimal overhead compared to virtual machines, there is still some overhead associated with containerization, particularly in terms of memory and CPU usage. Organizations need to monitor resource utilization and scale infrastructure accordingly to avoid performance bottlenecks.

In summary, containerization offers numerous benefits, including efficiency, portability, isolation, speed, DevOps integration, and a vibrant ecosystem. However, it also comes with challenges such as complex networking, security concerns, limited OS support, persistent storage management, learning curve, and resource overhead. By carefully evaluating these factors, organizations can make informed decisions about adopting containerization and leveraging its advantages for modern application deployment and management.

Application vs. system containers

Let's dive into the world of application containers versus system containers:

Imagine you're navigating through a bustling city, each street filled with unique shops, restaurants, and attractions. In the realm of computing, this bustling city represents your software ecosystem, bustling with applications, services, and systems. Here, we encounter two distinct types of containers: application containers and system containers. Let's explore each and how they fit into the grand landscape of virtualization and containerization.

Application Containers

Application containers are like specialized boutiques within the city, each housing a specific application along with its dependencies and configurations. These containers encapsulate everything needed to run a particular application, from the code to the runtime environment, neatly packaged for portability and efficiency.

In the realm of virtualization vs containerization, application containers shine as lightweight, nimble solutions for deploying individual applications. Unlike traditional virtual machines, which require separate operating systems for each application, application containers leverage shared resources, making them more efficient and scalable.

Whether it's a web server, database, or microservice, application containers provide a consistent environment for running and managing diverse workloads, enabling developers to focus on building and deploying software without worrying about underlying infrastructure complexities.

System Containers

System containers, on the other hand, are like sturdy skyscrapers within the city, each housing an entire operating system along with its components and services. These containers provide a standardized environment for running system-level processes and services, such as system daemons, utilities, and networking stacks.

In the realm of virtualization vs containerization, system containers offer a lighter-weight alternative to traditional virtual machines. While each virtual machine runs its own operating system kernel, system containers share the host OS kernel, resulting in faster startup times and lower resource overhead.

System containers are ideal for tasks that require a consistent and isolated environment, such as running multiple instances of the same application or experimenting with different system configurations. They provide a convenient way to manage and deploy system-level software without the overhead of managing separate VMs.

In summary, application containers and system containers play complementary roles in the ever-evolving landscape of virtualization and containerization. Application containers offer lightweight, portable solutions for deploying individual applications, while system containers provide standardized environments for running system-level processes and services. Together, they form the building blocks of modern software ecosystems, empowering developers to innovate and iterate with ease in the bustling city of computing.

How does Containerization relate to the cloud?

Imagine the cloud as a vast, boundless sky, where data flows freely like clouds drifting across the horizon. In this expansive realm of computing, containerization serves as a powerful catalyst, propelling applications and services to new heights. But how does containerization intersect with the cloud, and what role does it play in shaping the future of digital infrastructure?

Efficiency and Scalability

In the realm of virtualization vs containerization, containers excel in efficiency and scalability, making them well-suited for the dynamic nature of cloud environments. Containers can be spun up and torn down in seconds, enabling rapid deployment and scaling of applications to meet fluctuating demand. This agility is essential for modern cloud-native architectures, where responsiveness and flexibility are paramount.

Portability and Flexibility

Containers are inherently portable, allowing applications to run consistently across different cloud environments, from public clouds like AWS and Azure to private clouds and hybrid infrastructures. This portability enables organizations to adopt a multi-cloud strategy, leveraging the strengths of each cloud provider while avoiding vendor lock-in.

DevOps Integration

Containerization aligns seamlessly with DevOps practices, fostering collaboration between development and operations teams. With containers, developers can package applications and their dependencies into self-contained units, enabling consistent deployment across development, testing, and production environments. This standardized approach streamlines the software development lifecycle and accelerates time-to-market.

Resource Optimization

Containers optimize resource utilization in cloud environments by sharing resources more efficiently than virtual machines. With containers, multiple applications can run on the same host, maximizing resource usage and reducing costs. This resource optimization is particularly valuable in cloud environments where scalability and cost-effectiveness are key considerations.

Orchestration and Management

Container orchestration platforms like Kubernetes have become essential tools for managing containerized workloads in the cloud. These platforms automate tasks such as deployment, scaling, and load balancing, making it easier to manage complex containerized environments at scale. With container orchestration, organizations can achieve high availability, fault tolerance, and scalability in the cloud.
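The idea behind this automation is often described as reconciliation: compare the desired state with what is actually running and compute the actions that close the gap. The toy sketch below illustrates that idea only. It is not how Kubernetes is implemented, and the function and instance names are hypothetical.

```python
def reconcile(desired: int, instances: dict[str, bool]) -> dict[str, object]:
    """Compute actions that move the running set toward the desired count.

    `instances` maps an instance name to whether it is currently healthy.
    """
    unhealthy = sorted(name for name, ok in instances.items() if not ok)
    healthy = sorted(name for name, ok in instances.items() if ok)
    surplus = healthy[desired:]                  # healthy instances beyond the target
    missing = max(0, desired - len(healthy))     # replacements still needed
    return {"remove": unhealthy + surplus, "start": missing}


if __name__ == "__main__":
    # Want 3 replicas; one of the two existing instances has failed.
    print(reconcile(3, {"web-1": True, "web-2": False}))
    # Want 1 replica; two healthy instances are running.
    print(reconcile(1, {"a": True, "b": True}))
```

An orchestrator runs a loop like this continuously, which is what provides fault tolerance: a failed container is simply one more difference between desired and actual state.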

In summary, containerization is intricately linked to the cloud, offering efficiency, scalability, portability, flexibility, DevOps integration, resource optimization, and streamlined orchestration and management. As organizations embrace cloud-native architectures and digital transformation, containerization emerges as a foundational technology, enabling agility, innovation, and competitiveness in the ever-evolving landscape of cloud computing.

How is Containerization Related to Microservices?

In the fast-paced world of software development, where agility and scalability reign supreme, the relationship between containerization and microservices is nothing short of revolutionary. Imagine a bustling cityscape, where each building represents a component of your application. Now, let's delve into how containerization and microservices intertwine, reshaping the skyline of digital architecture.

Foundations of Infrastructure

Just as a city relies on a solid foundation to support its skyscrapers, modern software development hinges on the infrastructure that underpins it. Here, the choice between virtualization vs containerization sets the stage. Traditional virtualization erects separate buildings for each application, akin to standalone structures in a city. In contrast, containerization constructs modular, stackable containers, each housing a microservice. This approach mirrors the architectural elegance of a cityscape, where smaller, interconnected buildings coexist harmoniously.

Efficiency in Resource Allocation

Picture a city with limited space—efficient resource allocation is key. In the realm of virtualization vs containerization, containers emerge as the architects of efficiency. Like modular apartments within a building, containers share underlying resources, optimizing space and minimizing waste. This efficiency resonates with the microservices paradigm, where individual components operate independently, yet seamlessly integrate to deliver a cohesive experience.

Flexibility and Scalability

Just as a city must adapt to changing needs, software systems must evolve to meet user demands. Here, containerization and microservices shine. Containers provide the flexibility to scale individual microservices dynamically, much like adding new floors to a skyscraper. This agility empowers developers to respond swiftly to fluctuating traffic, ensuring optimal performance and user satisfaction.

Isolation and Resilience

In a bustling cityscape, each building must stand tall amidst the chaos. Similarly, in the realm of virtualization vs containerization, isolation is paramount. While traditional virtualization erects sturdy walls between applications, containerization ensures robust isolation between microservices. This resilience prevents failures in one microservice from cascading across the entire system, maintaining stability in the face of adversity.

Streamlined Development Pipelines

Imagine a city where construction projects seamlessly progress from blueprint to skyscraper. Similarly, containerization and microservices streamline the software development lifecycle. Containers encapsulate microservices and their dependencies, enabling consistent deployment across diverse environments. This standardization accelerates development pipelines, fostering collaboration and innovation at every stage.

Harmony in DevOps Practices

Finally, like the harmonious coexistence of city planners and architects, containerization and microservices align with DevOps principles. DevOps bridges the gap between development and operations, much like the infrastructure that supports a vibrant cityscape. Containerization facilitates continuous integration and deployment, while microservices enable teams to iterate independently, fostering a culture of innovation and collaboration.

In essence, the relationship between containerization and microservices parallels the dynamic interplay of architecture and urban planning. Together, they form the foundation of modern software ecosystems, enabling organizations to build scalable, resilient, and adaptable systems that thrive in the ever-evolving landscape of technology.

What is a virtual machine?

Imagine you're an architect tasked with designing a futuristic cityscape, where flexibility and efficiency are paramount. In this digital metropolis, a virtual machine (VM) serves as the cornerstone of your infrastructure, reshaping the skyline of computing. But what exactly is a virtual machine, and how does it compare to its innovative counterpart, containerization?

Isolated Environments

Picture virtual machines as towering skyscrapers, each housing a self-contained ecosystem of resources. Unlike containerization, which shares the host operating system's kernel, virtual machines operate independently, with their own virtualized hardware and operating systems. This isolation ensures that changes or failures in one virtual machine do not impact others, fostering stability and security.

Resource Allocation

In the dynamic landscape of computing, efficient resource allocation is essential. Here, virtualization and containerization diverge in their approaches. Virtual machines consume more resources due to the overhead of running multiple operating systems. Each virtual machine requires its own allocation of CPU, memory, and storage, akin to individual skyscrapers with their own infrastructure.

Versatility and Compatibility

Just as a city accommodates diverse architectural styles, virtual machines support a wide range of operating systems and applications. This versatility makes virtualization a popular choice for organizations with heterogeneous environments, allowing them to run legacy applications alongside modern software without compatibility issues.

Migration and Mobility

Picture virtual machines as modular skyscrapers that can be transported seamlessly across different locations. With virtualization, you can migrate virtual machines between physical hosts with ease, ensuring continuity of service and minimizing downtime. This mobility facilitates disaster recovery, load balancing, and resource optimization in dynamic computing environments.

Management and Administration

In the bustling cityscape of computing, effective management is essential to ensure smooth operation. Virtualization platforms provide centralized management tools for provisioning, monitoring, and securing virtual machines. This streamlined administration simplifies tasks such as patch management, resource allocation, and capacity planning, empowering organizations to maintain control over their digital infrastructure.

In summary, a virtual machine is a cornerstone of virtualization technology, providing isolated environments for running multiple operating systems and applications on a single physical machine. While virtualization offers versatility, isolation, and mobility, it comes with higher resource overhead compared to its innovative counterpart, containerization. As organizations navigate the evolving landscape of computing, understanding the strengths and limitations of virtualization vs containerization is essential for building resilient and scalable digital ecosystems.

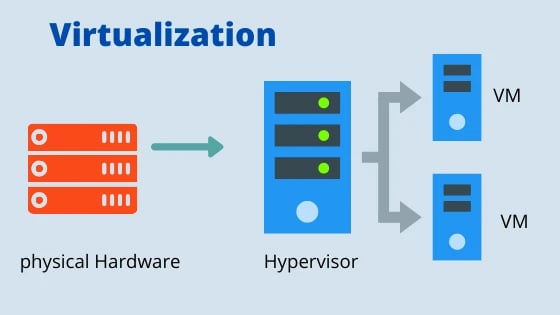

What Is Virtualization?

Imagine you're an urban planner tasked with designing a city that seamlessly accommodates diverse lifestyles and needs. In the world of computing, virtualization serves as the architect of such digital landscapes, reshaping the infrastructure to optimize efficiency and flexibility. But what exactly is virtualization, and how does it differ from its innovative sibling, containerization?

Foundation of Digital Architecture

Virtualization is the cornerstone of modern computing, akin to the blueprint that guides the construction of a city. In the realm of virtualization vs containerization, virtualization creates virtual instances of hardware, allowing multiple operating systems and applications to coexist on a single physical machine. To learn more, refer to the article What is virtualization.

Isolated Environments

Picture virtual machines as a series of skyscrapers, each housing its own ecosystem of resources. Unlike containers, which share the host operating system's kernel, virtual machines operate independently with their own virtualized hardware and operating systems. This isolation ensures that changes or failures in one virtual machine do not impact others, fostering stability and security. For a comparison of two popular platforms, refer to the article Comparing VMware and Proxmox.

Migration and Mobility

Picture virtual machines as modular skyscrapers that can be transported seamlessly across different locations. With virtualization, you can migrate virtual machines between physical hosts with ease, ensuring continuity of service and minimizing downtime. This mobility facilitates disaster recovery, load balancing, and resource optimization in dynamic computing environments. To learn about the best virtual machine software of 2023, refer to the article Best Virtual Machine Software of 2023.

In summary, virtualization is a foundation of modern computing, providing isolated environments for running multiple operating systems and applications on a single physical machine. While virtualization offers versatility, isolation, and mobility, it comes with higher resource overhead compared to its innovative counterpart, containerization. As organizations navigate the evolving landscape of computing, understanding the strengths and limitations of virtualization vs containerization is essential for building resilient and scalable digital ecosystems.

What Is a Hypervisor?

Imagine you're the conductor of a grand symphony, orchestrating a harmonious blend of instruments to create a masterpiece. In the world of computing, hypervisors serve as the maestros of virtualization, conducting a symphony of virtual machines to optimize resource utilization and flexibility. But what exactly is a hypervisor, and how does it compare to its counterpart, containerization?

Conductor of Virtualization

A hypervisor is like the conductor of a virtual orchestra, overseeing the creation and management of virtual machines (VMs). In the realm of virtualization vs containerization, hypervisors emulate physical hardware, enabling multiple operating systems and applications to run concurrently on a single physical machine.

Isolation and Segregation

Picture a hypervisor as the stage upon which each virtual machine performs its own unique melody. Unlike containerization, which shares the host operating system's kernel, virtual machines operate independently, with their own virtualized hardware and operating systems. This isolation ensures that changes or failures in one virtual machine do not affect others, maintaining stability and security.

Resource Allocation

In the symphony of computing, efficient resource allocation is key to achieving harmony. Here, virtualization and containerization diverge in their approaches. Hypervisors allocate resources to each virtual machine, much like assigning seats in a concert hall. Each VM receives its own allocation of CPU, memory, and storage, which keeps distribution fair and performance predictable.
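The seat-assignment idea can be sketched as a toy admission check. This is a minimal illustration, not how any particular hypervisor works: the `VMSpec` and `ToyHypervisor` names and all capacity figures are hypothetical, and the sketch uses strict reservation, whereas real hypervisors often allow some overcommitment.

```python
from dataclasses import dataclass, field


@dataclass
class VMSpec:
    """Resources requested by one virtual machine (hypothetical fields)."""
    name: str
    vcpus: int
    mem_gb: int
    disk_gb: int


@dataclass
class ToyHypervisor:
    """A host with fixed capacity that reserves resources per VM."""
    cpus: int
    mem_gb: int
    disk_gb: int
    vms: list = field(default_factory=list)

    def free(self):
        """Return (cpus, mem_gb, disk_gb) not yet reserved by any VM."""
        return (
            self.cpus - sum(v.vcpus for v in self.vms),
            self.mem_gb - sum(v.mem_gb for v in self.vms),
            self.disk_gb - sum(v.disk_gb for v in self.vms),
        )

    def create_vm(self, spec: VMSpec) -> bool:
        """Reserve resources for spec; refuse if any resource would run out."""
        cpu, mem, disk = self.free()
        if spec.vcpus > cpu or spec.mem_gb > mem or spec.disk_gb > disk:
            return False
        self.vms.append(spec)
        return True


host = ToyHypervisor(cpus=16, mem_gb=64, disk_gb=1000)
ok_web = host.create_vm(VMSpec("web", vcpus=4, mem_gb=16, disk_gb=100))
ok_db = host.create_vm(VMSpec("db", vcpus=8, mem_gb=32, disk_gb=500))
ok_batch = host.create_vm(VMSpec("batch", vcpus=8, mem_gb=8, disk_gb=100))
print(ok_web, ok_db, ok_batch)  # the third request exceeds the remaining CPUs
print(host.free())
```

The third request is refused because only four CPUs remain unreserved, which is the concert hall running out of seats.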

Versatility and Compatibility

Just as a conductor blends diverse musical instruments into a cohesive symphony, hypervisors support a wide range of operating systems and applications. This versatility makes virtualization a popular choice for organizations with heterogeneous environments, allowing them to accommodate legacy systems alongside modern software without compatibility issues.

Migration and Mobility

Picture a hypervisor as a conductor's baton, guiding the seamless movement of virtual machines across different physical hosts. With virtualization, you can migrate VMs between servers with ease, ensuring continuity of service and minimizing disruption. This mobility facilitates disaster recovery, load balancing, and resource optimization in dynamic computing environments.
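To make the load-balancing use of migration concrete, here is a small sketch of the placement decision only, not of the migration mechanics themselves. The host names, VM names, and load numbers are hypothetical, and the rule (move the smallest VM from the busiest host to the least busy one) is a deliberately simple heuristic chosen for illustration.

```python
def pick_migration(hosts):
    """hosts maps host name -> {vm name: load}.

    Return (vm, source, target) for moving the smallest VM from the busiest
    host to the least busy host, or None if the target would end up at least
    as loaded as the source is now.
    """
    load = {h: sum(vms.values()) for h, vms in hosts.items()}
    src = max(load, key=load.get)
    dst = min(load, key=load.get)
    if src == dst or not hosts[src]:
        return None
    vm = min(hosts[src], key=hosts[src].get)
    if load[dst] + hosts[src][vm] >= load[src]:
        return None
    return vm, src, dst


def migrate(hosts, vm, src, dst):
    """Move the VM's entry from the source host to the target host."""
    hosts[dst][vm] = hosts[src].pop(vm)


hosts = {
    "host-a": {"vm1": 50, "vm2": 30, "vm3": 10},
    "host-b": {"vm4": 20},
}
plan = pick_migration(hosts)
if plan is not None:
    migrate(hosts, *plan)
print(plan)
print(hosts)
```

In a real platform the move itself would be a live migration handled by the hypervisor; the sketch only shows how a scheduler might decide which VM to move and where.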

Management and Administration

In the grand performance of computing, effective management is essential to ensure smooth operation. Hypervisor management platforms provide centralized tools for provisioning, monitoring, and securing virtual machines. This streamlined administration simplifies tasks such as patch management, resource allocation, and capacity planning, empowering organizations to maintain control over their virtual infrastructure.

In summary, hypervisors are the conductors of virtualization, orchestrating a symphony of virtual machines to achieve efficient resource utilization and flexibility. While virtualization offers versatility, isolation, and mobility, it comes with higher resource overhead compared to its innovative counterpart, containerization. As organizations navigate the dynamic landscape of computing, understanding how hypervisors compare with containerization is essential for building resilient and scalable digital ecosystems.

Bare Metal Hypervisor

In the vast landscape of digital infrastructure, where efficiency and performance reign supreme, the Bare Metal Hypervisor emerges as the stalwart guardian of computing excellence. Imagine it as the conductor of a grand orchestra, wielding its baton to orchestrate a symphony of virtual machines on raw hardware. But what exactly is the Bare Metal Hypervisor, and how does it differentiate itself in the epic battle of virtualization vs containerization?

Master of Raw Power

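The Bare Metal Hypervisor, also known as a Type 1 hypervisor, stands as a colossus upon the raw hardware, unfettered by the constraints of a host operating system. Because no general-purpose operating system sits between it and the machine, it can schedule CPU, memory, and I/O directly, which keeps virtualization overhead low. In the eternal struggle of virtualization vs containerization, this is the form of virtualization commonly found in data centers.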

Embrace of Isolation

Picture the Bare Metal Hypervisor as the guardian of each virtual machine, providing a fortress of isolation and security. Unlike containerization, which shares the host operating system's kernel, virtual machines under the Bare Metal Hypervisor operate independently, ensuring uncompromising stability and resilience.

Optimization of Resources

In the eternal battle for resource supremacy, the Bare Metal Hypervisor holds a strong position. While containerization offers agility and flexibility, the Bare Metal Hypervisor manages resource allocation at the hardware level, giving each virtual machine its configured share of CPU, memory, and storage.

Versatility Unleashed

Just as a maestro commands a diverse ensemble of instruments, the Bare Metal Hypervisor supports a vast array of operating systems and applications. This versatility makes it an indispensable tool for organizations with diverse needs, allowing them to run legacy systems alongside modern software without compromise.

Mobility Across Realms

Like a cosmic traveler traversing distant galaxies, the Bare Metal Hypervisor enables virtual machines to transcend physical boundaries. With live migration capabilities, VMs under the Bare Metal Hypervisor can move between physical hosts, typically ones with compatible processors, ensuring continuity of service and resilience in the face of adversity.

Management and Mastery

In the grand tapestry of digital orchestration, effective management is the key to a flawless performance. The Bare Metal Hypervisor provides a suite of powerful tools for provisioning, monitoring, and securing virtual machines. This mastery over administration simplifies tasks such as patch management, resource allocation, and capacity planning, ensuring the symphony of virtualization plays harmoniously.

In conclusion, the Bare Metal Hypervisor stands as a titan in the realm of virtualization, offering strong performance, isolation, and versatility. While containerization offers agility and efficiency, the Bare Metal Hypervisor carves its legacy upon the raw hardware of computing. As organizations navigate the ever-evolving landscape of digital infrastructure, understanding how the Bare Metal Hypervisor compares with containerization is essential for unlocking the true potential of their digital endeavors.

How Does Virtualization Work?

Imagine you're a skilled magician on a grand stage, adept at creating illusions that captivate and astound your audience. In the realm of computing, virtualization operates much like your magical prowess, conjuring up virtual environments that defy the limitations of physical hardware. But how does virtualization work, and how does it differ from its contemporary counterpart, containerization?

The Illusion of Reality

Virtualization is akin to crafting an illusion of reality, where virtual machines (VMs) appear as independent entities despite sharing underlying hardware. In the age-old debate of virtualization vs containerization, virtualization blurs the lines between the physical and digital realms, enabling multiple operating systems and applications to coexist harmoniously on a single physical machine.

Creation of Virtual Layers

Picture virtualization as the art of creating layers upon layers of virtual environments, much like stacking transparent sheets to form intricate patterns. Each virtual machine operates within its own encapsulated space, isolated from others yet interconnected through the underlying hypervisor.

Harnessing the Power of Hypervisors

At the heart of virtualization lies the hypervisor, a masterful conductor orchestrating the symphony of virtual machines. Unlike containerization, which relies on a shared host kernel, virtualization utilizes hypervisors to emulate physical hardware and manage the allocation of CPU, memory, and storage resources to each VM.

Efficient Resource Utilization

In the grand spectacle of computing, efficient resource utilization is paramount. While containerization offers agility and scalability, virtualization optimizes resource allocation at the hardware level, ensuring each virtual machine receives its fair share of resources without compromising performance.

Isolation and Security

Like a magician's protective barrier, virtualization provides a fortress of isolation and security for each virtual machine. Unlike containerization, where containers share the host operating system's kernel, virtual machines operate independently, minimizing the risk of interference and ensuring data integrity.

Flexibility and Versatility

Just as a magician can transform one illusion into another with a wave of the hand, virtualization offers unparalleled flexibility and versatility. With virtual machines, organizations can run a diverse array of operating systems and applications, accommodating legacy systems alongside modern software without compromise.

In summary, virtualization is a masterful illusionist, weaving together virtual environments that transcend the limitations of physical hardware. While containerization offers agility and efficiency, virtualization harnesses the power of hypervisors to create isolated, secure, and versatile virtual machines. As organizations navigate the ever-evolving landscape of computing, understanding the magic of virtualization vs containerization is essential for unlocking the true potential of their digital endeavors.

Virtualization Software Components

Welcome to the virtual playground of computing, where the magic of virtualization software components transforms the ordinary into the extraordinary. Picture a bustling cityscape of digital innovation, where skyscrapers of efficiency and flexibility rise high above the horizon. But what exactly are these virtualization software components, and how do they shape the landscape in the eternal dance of virtualization vs containerization?

Hypervisor: The Maestro of Virtualization

At the heart of virtualization lies the hypervisor, a masterful conductor orchestrating the symphony of virtual machines (VMs). Unlike containerization, which shares the host operating system's kernel, the hypervisor creates isolated environments, allowing multiple VMs to coexist on a single physical machine, each with its own virtualized hardware and operating system.

Virtual Machines: The Building Blocks of Virtualization

Picture virtual machines as towering skyscrapers, each housing a self-contained ecosystem of resources and applications. Unlike containers, which share resources with the host and other containers, virtual machines operate independently, providing a level of isolation and security that is essential for enterprise-grade applications.

Resource Pooling and Allocation: Optimizing Efficiency

In the dynamic landscape of computing, efficient resource utilization is key to achieving optimal performance. While containerization offers agility and scalability, virtualization software components optimize resource allocation at the hardware level, ensuring each VM receives its fair share of CPU, memory, and storage resources without compromising performance.

Management and Orchestration: The Art of Control

Just as a city requires careful planning and management, virtualization software components provide powerful tools for provisioning, monitoring, and securing virtual machines. This centralized control simplifies tasks such as patch management, resource allocation, and capacity planning, empowering organizations to maintain control over their virtual infrastructure.

Migration and Mobility: Seamless Transitions Across Environments

Like travelers crossing borders without impediment, virtual machines under the guidance of virtualization software components can migrate seamlessly between different physical hosts. This mobility ensures continuity of service and resilience in the face of adversity, facilitating disaster recovery, load balancing, and resource optimization in dynamic computing environments.

Versatility and Compatibility: Embracing Diversity

In the vibrant tapestry of digital innovation, virtualization software components support a wide range of operating systems and applications. This versatility makes them indispensable tools for organizations with heterogeneous environments, allowing them to run legacy systems alongside modern software without compatibility issues.

In summary, virtualization software components are the architects of efficiency and flexibility in the realm of computing. While containerization offers agility and scalability, virtualization harnesses the power of hypervisors to create isolated, secure, and versatile virtual machines. As organizations navigate the ever-evolving landscape of technology, understanding the intricacies of virtualization vs containerization is essential for unlocking the true potential of their digital endeavors.

Benefits and Disadvantages of Virtualization

Let's explore the benefits and disadvantages of virtualization:

Benefits:

Efficient Resource Utilization

Virtualization optimizes resource utilization by allowing multiple virtual machines (VMs) to run on a single physical server. Unlike traditional server setups, where each application requires its own dedicated hardware, virtualization enables better use of computing resources, reducing hardware costs and energy consumption.

Flexibility and Scalability

Virtualization offers unparalleled flexibility and scalability, allowing organizations to quickly provision and deploy new VMs to meet changing workload demands. Whether scaling up to handle increased traffic or scaling down to conserve resources during periods of low activity, virtualization provides agility to adapt to evolving business needs.

Isolation and Security

With virtualization, each VM operates in its own isolated environment, providing a layer of security and preventing applications from interfering with each other. This isolation enhances data security and limits how far malware or unauthorized access in one VM can spread, making virtualization a strong choice for hosting multiple applications with varying security requirements.

Disaster Recovery and High Availability

Virtualization simplifies disaster recovery planning by allowing VMs to be easily backed up, replicated, and restored in case of hardware failures or disasters. Additionally, virtualization software often includes features for live migration and failover, ensuring high availability and minimizing downtime during maintenance or hardware upgrades.
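The backup-and-restore idea can be sketched with a toy snapshot mechanism. This is an assumption-laden illustration: `ToyVM` and its methods are hypothetical names, a dictionary stands in for the disk, and the full deep copy used here is a simplification, since real platforms typically rely on copy-on-write disk images instead.

```python
import copy


class ToyVM:
    """A stand-in for a VM whose 'disk' is just a dictionary of files."""

    def __init__(self, name, disk):
        self.name = name
        self.disk = disk
        self._snapshots = {}

    def snapshot(self, label):
        """Save a deep copy of the current disk state under the given label."""
        self._snapshots[label] = copy.deepcopy(self.disk)

    def restore(self, label):
        """Replace the disk state with a copy of the saved snapshot."""
        self.disk = copy.deepcopy(self._snapshots[label])


vm = ToyVM("app-server", {"/etc/app.conf": "workers=4"})
vm.snapshot("before-upgrade")

# Simulate an upgrade that leaves the configuration in a bad state.
vm.disk["/etc/app.conf"] = "workers=BROKEN"

# Roll back to the known-good state.
vm.restore("before-upgrade")
print(vm.disk)
```

Because the whole machine state is captured as data, rolling back is a single operation rather than a manual repair.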

Disadvantages:

Resource Overhead

While virtualization offers efficiency in resource utilization, it also introduces some overhead. The hypervisor, which manages virtual machines, consumes additional CPU and memory resources, and every VM also runs its own guest operating system, which can impact overall system performance. In contrast, containerization imposes less overhead since containers share the host operating system's kernel.

Complexity and Management Overhead

Managing a virtualized environment can be complex and requires specialized skills. IT administrators must oversee tasks such as provisioning, monitoring, and maintaining virtual machines, as well as managing storage, networking, and security configurations. Containerization, with its simpler architecture and management model, may be more straightforward for some use cases.

Performance Variability

Virtualization introduces performance variability due to the "noisy neighbor" effect, where resource-intensive VMs on the same physical host can impact the performance of other VMs. While resource allocation techniques such as reservations and limits can mitigate this issue, it remains a concern for applications with strict performance requirements. Containers are not immune: they share one kernel and one host, so they need resource limits of their own to deliver consistent performance.
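The noisy neighbor effect, and the way a resource limit softens it, can be sketched with a simple proportional-share model. The model is an assumption made for illustration; real schedulers are considerably more sophisticated, and the VM names and demand figures are made up.

```python
def share_cpu(demands, capacity, cap=None):
    """Split capacity CPU units among VMs in proportion to their demand.

    If cap is given, each VM's demand is first clamped to that limit.
    When total demand fits within capacity, every VM gets what it asked for.
    """
    if cap is not None:
        demands = {vm: min(d, cap) for vm, d in demands.items()}
    total = sum(demands.values())
    if total <= capacity:
        return dict(demands)
    scale = capacity / total
    return {vm: d * scale for vm, d in demands.items()}


# Hypothetical vCPU demand on a host with 10 CPU units available.
demands = {"noisy": 14.0, "web": 2.0, "db": 4.0}

uncapped = share_cpu(demands, capacity=10.0)
capped = share_cpu(demands, capacity=10.0, cap=4.0)

print(uncapped)  # web and db receive only half of what they need
print(capped)    # with a 4-unit limit per VM, web and db are fully served
```

Without a limit, the noisy VM's demand squeezes its neighbors; with a per-VM cap in place, the quieter workloads receive everything they asked for.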

Vendor Lock-In

Adopting virtualization often involves investing in proprietary virtualization software from vendors like VMware or Microsoft. While these solutions offer robust features and support, they can also lead to vendor lock-in, limiting flexibility and increasing dependency on specific technology stacks. Open-source hypervisors such as KVM and Xen reduce this risk, and containerization, with its open-source ecosystem and standardized interfaces, offers more freedom of choice and portability across different platforms.

In summary, virtualization offers numerous benefits, including efficient resource utilization, flexibility, scalability, isolation, security, and disaster recovery capabilities. However, it also comes with disadvantages such as resource overhead, complexity, performance variability, and vendor lock-in. Organizations must carefully weigh these factors and consider alternative approaches like containerization when determining the best fit for their specific use cases and requirements.

How Does Virtualization Relate to the Cloud?

In the ethereal realm of cloud computing, where data flows like streams of consciousness, virtualization serves as the architect of digital landscapes, shaping the very fabric of the cloud. But how does virtualization relate to this celestial domain, and how does it stand against its innovative counterpart, containerization?

Foundation of Cloud Infrastructure

Virtualization lays the groundwork for the cloud, much like the bedrock upon which a magnificent castle is built. In the eternal duel of virtualization vs containerization, virtualization pioneers the cloud's infrastructure by creating virtual instances of hardware, allowing multiple virtual machines (VMs) to coexist on a single physical server.

Efficient Resource Utilization

Within the cloud's vast expanse, efficient resource utilization is paramount. Virtualization optimizes resource allocation by enabling dynamic provisioning and scaling of VMs based on demand. Unlike containerization, which shares the host operating system's kernel, virtualization offers isolation and independence for each VM, ensuring optimal performance and security.

Versatility Across Environments

Like a chameleon adapting to its surroundings, virtualization software components support a diverse array of operating systems and applications within the cloud. This versatility makes virtualization an indispensable tool for organizations with heterogeneous environments, enabling them to seamlessly migrate workloads across different cloud providers or deploy hybrid cloud solutions.

Scalability and Agility

In the ever-evolving landscape of cloud computing, scalability and agility are the currency of innovation. Virtualization empowers organizations to scale their infrastructure dynamically, spinning up new VMs or resizing existing ones to meet fluctuating demand. This agility enables rapid deployment of applications and services, fostering innovation and competitiveness in the cloud.

Management and Orchestration

Just as a conductor leads a symphony orchestra, virtualization platforms provide centralized management and orchestration tools for provisioning, monitoring, and securing VMs within the cloud. This orchestration simplifies complex tasks such as load balancing, disaster recovery, and resource optimization, ensuring smooth operation and resilience in the face of challenges.

Migration and Portability

Like travelers journeying across distant lands, VMs under the guidance of virtualization can migrate between different cloud environments. Whether moving workloads between public clouds, private clouds, or on-premises data centers, virtualization facilitates portability and flexibility, enabling organizations to adapt to changing business needs and infrastructure requirements. Moves between different platforms may require converting the VM's disk image format first.

In summary, virtualization is deeply intertwined with the cloud, serving as the foundation upon which the digital realm is built. While containerization offers agility and efficiency, virtualization provides robustness, versatility, and scalability essential for cloud computing. As organizations navigate the celestial expanse of the cloud, understanding the role of virtualization vs containerization is essential for unlocking the full potential of their digital endeavors.

How Hypervisors are Used for Virtualization

In the bustling landscape of digital architecture, where efficiency and versatility reign supreme, hypervisors emerge as the architects of virtualization, shaping the very fabric of computing landscapes. But how are hypervisors utilized for virtualization, and how do they stand against the rising tide of containerization?

The Maestros of Virtualization

Hypervisors stand as the maestros of virtualization, conducting a symphony of virtual machines (VMs) on physical hardware. In the eternal dance of virtualization vs containerization, hypervisors orchestrate the creation and management of VMs, providing the foundation for efficient resource utilization and isolation.

Emulating Hardware

Unlike containerization, which shares the host operating system's kernel, hypervisors emulate physical hardware to create isolated environments for VMs. Each VM operates as if it were running on dedicated hardware, complete with its own virtualized CPU, memory, storage, and networking interfaces.

Isolation and Security

With hypervisors, each VM operates in its own isolated sandbox, ensuring that applications running within one VM cannot interfere with those in another. This isolation provides a layer of security and helps prevent a compromise in one VM from spreading across the system, making virtualization a preferred choice for hosting multi-tenant environments and sensitive workloads.

Resource Allocation and Optimization

Hypervisors optimize resource allocation by dynamically allocating CPU, memory, and storage resources to each VM based on demand. Unlike containers, which draw directly on the host's resources alongside other containers, virtual machines under hypervisors receive their own configured resource allocations, supporting fair distribution and predictable performance.

Versatility Across Environments

Just as a conductor leads an orchestra through diverse musical compositions, hypervisors support a wide range of operating systems and applications within virtualized environments. This versatility makes virtualization an ideal solution for organizations with heterogeneous infrastructures, allowing them to run legacy systems alongside modern applications without compatibility issues.

Migration and Mobility

Like nomads traversing vast landscapes, VMs under hypervisor control can migrate seamlessly between different physical hosts or cloud environments. This mobility enables organizations to achieve high availability, disaster recovery, and workload balancing, ensuring continuity of service and resilience in the face of hardware failures or maintenance events.

In summary, hypervisors serve as the backbone of virtualization, providing the infrastructure and management tools necessary to create and manage virtual machines. While containerization offers agility and efficiency, virtualization with hypervisors offers robustness, versatility, and security essential for modern computing environments. As organizations navigate the ever-evolving landscape of technology, understanding how hypervisors compare with containerization is essential for unlocking the full potential of their digital endeavors.

Containerization vs. Virtualization: Key Differences

In the realm of digital architecture, where efficiency and flexibility are paramount, the debate between virtualization and containerization rages on like a timeless saga. These two titans of technology offer distinct approaches to deploying and managing applications, each with its strengths and weaknesses. Let's delve into the key differences between containerization and virtualization, unraveling their mysteries in the digital landscape.

Foundation of Deployment

Virtualization builds its empire upon the emulation of physical hardware, using hypervisors to create isolated virtual machines (VMs) that run multiple operating systems and applications. Containerization, on the other hand, operates at a higher level, leveraging lightweight containers to encapsulate applications and their dependencies within a shared operating system kernel.

Resource Utilization

Virtualization allocates dedicated resources to each VM, including CPU, memory, and storage, resulting in a higher resource overhead compared to containerization. Containers, however, share the host operating system's kernel and resources, allowing for more efficient resource utilization and faster startup times.
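A back-of-the-envelope calculation shows why shared resources translate into higher density. All figures below are made-up assumptions for illustration (memory in MB): each VM carries its own guest OS, while containers share a single host OS and add only a small per-container overhead.

```python
def instances_per_host(host_mem_mb, app_mem_mb, per_instance_overhead_mb,
                       shared_overhead_mb=0):
    """Return how many instances fit in host memory.

    per_instance_overhead_mb is paid once per instance (for example, a guest OS).
    shared_overhead_mb is paid once per host (for example, the shared host OS).
    """
    usable = host_mem_mb - shared_overhead_mb
    return usable // (app_mem_mb + per_instance_overhead_mb)


HOST_MEM_MB = 64_000  # hypothetical host
APP_MEM_MB = 1_000    # hypothetical application footprint

# VMs: every instance includes roughly 1,000 MB for its own guest OS.
vm_count = instances_per_host(HOST_MEM_MB, APP_MEM_MB,
                              per_instance_overhead_mb=1_000)

# Containers: one shared host OS (1,000 MB) plus about 50 MB per container.
container_count = instances_per_host(HOST_MEM_MB, APP_MEM_MB,
                                     per_instance_overhead_mb=50,
                                     shared_overhead_mb=1_000)

print(vm_count, container_count)
```

Under these assumed numbers the same host fits roughly twice as many containers as VMs; the actual ratio depends entirely on the workload and the guest OS footprint.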

Isolation and Security

Virtualization offers stronger isolation between VMs, as each VM operates independently with its own kernel and filesystem. This isolation provides a higher level of security but comes with greater resource overhead. In contrast, containers share the host operating system's kernel, which may pose security risks if not properly configured but offers lighter-weight isolation and faster performance.
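One way to observe the shared-kernel point is to ask the operating system which kernel it is running. The sketch below uses Python's standard `platform` module; the `kernel_release` wrapper is just an illustrative name. On a Linux host, running it inside a container and directly on the host should report the same release, while inside a VM it reports the guest's own kernel. Container tools that run inside a helper VM, as some desktop setups do, will report that VM's kernel instead.

```python
import platform


def kernel_release() -> str:
    """Return the kernel release string reported by the running OS."""
    return platform.release()


print("kernel:", kernel_release())
```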

Portability and Dependency Management

Containers excel in portability and dependency management, as they encapsulate applications and their dependencies into a single package. This allows for consistent deployment across different environments, making containers an ideal choice for microservices architectures and DevOps practices. Virtual machines, while portable, require more overhead and complexity for dependency management and deployment.

Scalability and Agility

Containerization offers greater scalability and agility compared to virtualization, as containers can be spun up and down quickly to meet changing demand. With container orchestration platforms like Kubernetes, organizations can automate deployment, scaling, and management of containerized applications, enabling rapid development and deployment cycles.
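The scaling decision that an orchestrator automates can be sketched with the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: scale the current replica count by the ratio of the observed metric to the target metric and round up. The function name and sample numbers are illustrative; the real autoscaler also applies a tolerance band, minimum and maximum replica bounds, and stabilization windows, all omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric):
    """Return ceil(current_replicas * current_metric / target_metric)."""
    return math.ceil(current_replicas * current_metric / target_metric)


# Four replicas averaging 90% CPU against a 60% target: scale out.
scale_out = desired_replicas(4, current_metric=90, target_metric=60)

# Four replicas averaging 30% CPU against a 60% target: scale in.
scale_in = desired_replicas(4, current_metric=30, target_metric=60)

print(scale_out, scale_in)
```

Because containers start quickly, an orchestrator can act on this calculation frequently, which is what makes the rapid scale-up and scale-down described above practical.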

Ecosystem and Tooling

The containerization ecosystem has experienced rapid growth in recent years, with a plethora of tools and platforms available to streamline container management and orchestration. Virtualization, while mature and widely adopted, may lack the same level of innovation and ecosystem support found in the containerization space.

In summary, while both virtualization and containerization offer valuable solutions for deploying and managing applications, they differ in their approach to resource utilization, isolation, portability, scalability, and ecosystem support. Understanding the key differences between containerization and virtualization is essential for choosing the right technology to meet the specific needs and requirements of your digital projects.

Traditional Computing vs Virtualization vs Containerization

In the ever-evolving landscape of digital infrastructure, the debate among traditional computing, virtualization, and containerization echoes like a timeless saga, each offering distinct approaches to managing and deploying applications. Let's embark on a journey through these paradigms, unraveling their mysteries and uncovering their unique characteristics.

Traditional Computing

In the annals of computing history, traditional infrastructure stands as the bedrock upon which the digital revolution was built. In this era, applications run directly on physical servers, each dedicated to a specific workload. While this approach offers simplicity and familiarity, it often leads to underutilization of resources and inefficiencies, as each server operates independently, unable to adapt to changing demands.

Virtualization

Enter virtualization, the revolutionary force that reshapes the very fabric of computing. With virtualization, the hardware layer is abstracted, allowing multiple virtual machines (VMs) to run on a single physical server. Each VM operates as if it were running on dedicated hardware, complete with its own operating system and applications. This approach offers greater flexibility, resource utilization, and scalability compared to traditional computing, as VMs can be provisioned, migrated, and scaled dynamically to meet changing workload demands.
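The utilization gain from consolidating workloads can be illustrated with a small sketch. It places hypothetical workloads onto hosts with a first-fit-decreasing heuristic and compares the result with the one-server-per-workload model described under traditional computing. The CPU demands and the host capacity are made-up numbers, and real hypervisor schedulers also weigh memory, I/O, and overcommit policies, so treat this as a toy model rather than how any particular platform places VMs.

```python
def pack_vms(demands, host_capacity):
    """Place workloads onto hosts using first-fit decreasing.

    `demands` is a list of CPU cores needed by each workload and
    `host_capacity` is the cores available per physical host.
    Returns one list of placed demands per host.
    """
    hosts = []
    for demand in sorted(demands, reverse=True):
        if demand > host_capacity:
            raise ValueError("workload does not fit on any host")
        for host in hosts:
            if sum(host) + demand <= host_capacity:
                host.append(demand)
                break
        else:
            # No existing host has room: provision another one.
            hosts.append([demand])
    return hosts


def utilization(demands, host_count, host_capacity):
    """Fraction of total provisioned capacity that is actually used."""
    return sum(demands) / (host_count * host_capacity)


if __name__ == "__main__":
    demands = [2, 1, 3, 2, 4, 1, 2, 1]  # hypothetical cores per workload
    capacity = 8                        # hypothetical cores per host
    hosts = pack_vms(demands, capacity)
    print("dedicated servers:", utilization(demands, len(demands), capacity))
    print("consolidated hosts:", utilization(demands, len(hosts), capacity))
```

In this toy example, eight dedicated servers would be replaced by two shared hosts, which is the kind of consolidation that virtualization makes possible.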

Containerization

Now, behold the rising star of containerization, a paradigm-shifting force that challenges the status quo. Unlike virtualization, which abstracts the hardware layer, containerization abstracts the operating system layer, encapsulating applications and their dependencies into lightweight, portable containers. These containers share the host operating system's kernel, resulting in faster startup times, lower resource overhead, and greater consistency across different environments. With containerization, developers can package their applications once and run them anywhere, making it an ideal choice for modern microservices architectures and DevOps practices.
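One way to observe the shared kernel is to run the same small script on a Linux host and inside a container on that host. The sketch below reports the kernel release and, where the Linux /proc filesystem is available, the namespace identifiers of the current process. The function name is an assumption for illustration. On a Linux host the kernel release would typically match in both places, while at least some namespace identifiers differ. With Docker Desktop on macOS or Windows, containers run inside a Linux VM, so the kernel reported is that VM's.

```python
import os
import platform


def describe_isolation():
    """Report the kernel release and this process's Linux namespaces.

    On systems without /proc/self/ns (for example macOS or Windows),
    the namespaces dictionary is simply left empty.
    """
    info = {"kernel_release": platform.uname().release, "namespaces": {}}
    ns_dir = "/proc/self/ns"
    if os.path.isdir(ns_dir):
        for name in sorted(os.listdir(ns_dir)):
            try:
                # Each entry is a symlink such as "pid:[4026531836]".
                info["namespaces"][name] = os.readlink(os.path.join(ns_dir, name))
            except OSError:
                continue
    return info


if __name__ == "__main__":
    report = describe_isolation()
    print("kernel:", report["kernel_release"])
    for name, ident in report["namespaces"].items():
        print(name, ident)
```

A virtual machine running the same script would report whatever kernel its guest operating system boots, which is the practical meaning of abstracting the hardware layer rather than the operating system layer.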

Differences and Advantages

In the eternal battle between virtualization vs containerization, each approach offers unique advantages and trade-offs. Virtualization provides strong isolation between VMs, making it suitable for multi-tenant environments and legacy applications with strict security requirements. On the other hand, containerization excels in portability, agility, and resource efficiency, making it ideal for cloud-native applications and modern development workflows.

The Future of Computing

As we gaze into the horizon of digital innovation, one thing is clear: the landscape of computing will continue to evolve, driven by advances in virtualization, containerization, and beyond. Whether you choose traditional computing, virtualization, or containerization, understanding the strengths and limitations of each paradigm is essential for navigating the ever-changing terrain of technology and unlocking the full potential of your digital endeavors.

Hardware virtualization vs containerization

In the realm of digital architecture, where innovation thrives and efficiency reigns supreme, the clash between hardware virtualization and containerization emerges as a pivotal battle in the quest for optimal resource utilization and flexibility. Let's embark on a journey through these two paradigms, unraveling their mysteries and uncovering their unique characteristics.

Hardware Virtualization

At the heart of hardware virtualization lies the concept of abstraction, where the physical hardware layer is decoupled from the operating system and applications. In this realm, hypervisors serve as the gatekeepers, orchestrating the creation and management of virtual machines (VMs). Each VM operates as if it were running on dedicated hardware, complete with its own virtualized CPU, memory, storage, and networking interfaces. This approach offers strong isolation between VMs, making it ideal for multi-tenant environments and legacy applications with stringent security requirements.

Containerization

Enter containerization, a disruptive force that challenges the traditional boundaries of computing. Unlike hardware virtualization, which abstracts the hardware layer, containerization abstracts the operating system layer, encapsulating applications and their dependencies into lightweight, portable containers. These containers share the host operating system's kernel, resulting in faster startup times, lower resource overhead, and greater consistency across different environments. With containerization, developers can package their applications once and run them anywhere, making it an ideal choice for modern microservices architectures and DevOps practices.

Differences and Advantages

In the eternal clash between hardware virtualization vs containerization, each approach offers unique advantages and trade-offs. Hardware virtualization provides stronger isolation between VMs, making it suitable for environments with strict security requirements or legacy applications that cannot be containerized easily. However, it comes with higher resource overhead and slower startup times compared to containerization. On the other hand, containerization excels in portability, agility, and resource efficiency, making it ideal for cloud-native applications and modern development workflows.

Navigating the Digital Landscape

As organizations navigate the ever-evolving terrain of technology, understanding the strengths and limitations of hardware virtualization and containerization is essential for making informed decisions about infrastructure deployment and application architecture. Whether you choose hardware virtualization or containerization, the goal remains the same: to harness the power of digital innovation to drive efficiency and agility in your organization.

Virtualization vs containerization Docker

In the dynamic realm of digital transformation, the clash between virtualization and containerization, epitomized by the rising star Docker, represents a defining moment in the evolution of computing paradigms. Let's embark on a journey through these two transformative forces, virtualization and containerization, unraveling their nuances and exploring the innovative landscape they shape.

Virtualization

Virtualization, the venerable titan of computing, pioneers the abstraction of hardware, enabling multiple virtual machines (VMs) to coexist on a single physical server. With the aid of hypervisors, each VM operates independently, complete with its own virtualized CPU, memory, storage, and networking interfaces. This approach offers robust isolation between VMs, making it ideal for hosting diverse workloads in multi-tenant environments.

Containerization

Enter containerization, the disruptive force that challenges traditional boundaries and redefines the way applications are deployed and managed. Unlike virtualization, which abstracts the hardware layer, containerization abstracts the operating system layer, encapsulating applications and their dependencies into lightweight, portable containers. These containers share the host operating system's kernel, resulting in faster startup times, lower resource overhead, and greater consistency across different environments.

Docker

At the forefront of the containerization revolution stands Docker, a trailblazer that simplifies the creation, deployment, and management of containerized applications. With Docker, developers can package their applications and dependencies into standardized Docker images, which can then be deployed across any platform that supports Docker. This portability and consistency make Docker an indispensable tool for modern development workflows, enabling rapid iteration and deployment of cloud-native applications.

Differences and Advantages

In the eternal clash between virtualization vs containerization, each approach offers unique advantages and trade-offs. Virtualization provides strong isolation between VMs, making it suitable for environments with stringent security requirements or legacy applications that cannot be containerized easily. However, it comes with higher resource overhead and slower startup times compared to containerization. On the other hand, containerization excels in portability, agility, and resource efficiency, making it ideal for cloud-native applications and modern development practices.

Virtualization vs containerization in cloud computing

In the ethereal realm of cloud computing, where data dances among the clouds and innovation knows no bounds, the age-old debate between virtualization vs containerization casts a captivating shadow over the digital landscape. Let's embark on a journey through the clouds, unraveling the nuances of virtualization vs containerization and exploring their profound impact on the realm of cloud computing.

Virtualization

Virtualization, the venerable titan of computing, serves as the cornerstone of cloud infrastructure, paving the way for efficient resource utilization and dynamic scalability. With the aid of hypervisors, virtualization abstracts physical hardware, allowing multiple virtual machines (VMs) to coexist on a single physical server. Each VM operates independently, complete with its own virtualized CPU, memory, storage, and networking interfaces. This approach offers robust isolation between VMs, making it ideal for hosting diverse workloads in multi-tenant cloud environments.

Containerization

Enter containerization, the revolutionary force that challenges traditional boundaries and redefines the way applications are deployed and managed in the cloud. Unlike virtualization, which abstracts the hardware layer, containerization abstracts the operating system layer, encapsulating applications and their dependencies into lightweight, portable containers. These containers share the host operating system's kernel, resulting in faster startup times, lower resource overhead, and greater consistency across different cloud environments.

Differences and Advantages

In the celestial clash between virtualization vs containerization in cloud computing, each approach offers unique advantages and trade-offs. Virtualization provides strong isolation between VMs, making it suitable for environments with stringent security requirements or legacy applications that cannot be containerized easily. However, it comes with higher resource overhead and slower startup times compared to containerization. On the other hand, containerization excels in portability, agility, and resource efficiency, making it ideal for cloud-native applications and modern development practices.

Navigating the Cloudscape

As organizations navigate the boundless expanse of cloud computing, understanding the strengths and limitations of virtualization and containerization is essential for making informed decisions about infrastructure deployment and application architecture. Whether you choose virtualization or containerization, the goal remains the same: to harness the power of the cloud to drive efficiency, agility, and innovation in your organization, ensuring that your digital endeavors soar to new heights among the clouds.

Container vs VM security

In the realm of cybersecurity, where the battle between safeguarding data and enabling innovation rages on, the clash between virtualization and containerization manifests as a pivotal point of contention. Let's embark on a journey through the digital fortress, exploring the nuances of container vs VM security and the trade-offs each approach brings.

Virtual Machines (VMs)

In the ancient halls of virtualization, VMs stand as the stalwart guardians of data integrity and confidentiality. With the aid of hypervisors, VMs abstract physical hardware, creating isolated environments where applications and operating systems run independently. This strong isolation provides a robust defense against external threats, making VMs well-suited for environments with stringent security requirements.

Containers

Enter containers, the agile sentinels of modern computing, offering a lightweight and portable approach to application deployment. Unlike VMs, which encapsulate entire operating systems, containers share the host operating system's kernel, resulting in lower resource overhead and faster startup times. While containers offer agility and scalability, their shared kernel introduces potential security risks: a kernel vulnerability exploited from one container could affect the host and the other containers running on it.

Security Considerations

In the eternal duel of container vs VM security, each approach offers unique advantages and challenges. VMs provide strong isolation between applications, making them suitable for multi-tenant environments and legacy applications with strict security requirements, but they come with higher resource overhead and slower startup times compared to containers. Containers, on the other hand, offer lightweight and fast deployment, making them ideal for cloud-native applications and DevOps workflows. Their shared kernel, however, introduces potential security risks that require careful configuration and monitoring to mitigate.

Defense Strategies

To bolster security in containerized environments, organizations must implement a multi-layered defense strategy. This includes hardening the host operating system, securing container images, implementing access controls, and monitoring container runtime behavior. Additionally, organizations can leverage tools such as container security platforms, vulnerability scanners, and runtime protection mechanisms to detect and mitigate threats in real-time.
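As one small piece of such a strategy, the sketch below assembles a `docker run` command with several commonly recommended hardening flags: a read-only root filesystem, all Linux capabilities dropped, privilege escalation disabled, a non-root user, and memory and process limits. The function name, the default values, and the image name are assumptions for illustration. The right settings depend on the application, and this covers only runtime flags, not image scanning or host hardening.

```python
import shlex


def hardened_run_command(image, user="1000:1000", memory="256m", pids_limit=100):
    """Build an argument list for `docker run` with restrictive defaults."""
    return [
        "docker", "run", "--rm",
        "--read-only",                            # read-only root filesystem
        "--cap-drop", "ALL",                      # drop all Linux capabilities
        "--security-opt", "no-new-privileges",    # block privilege escalation
        "--user", user,                           # run as a non-root UID:GID
        "--memory", memory,                       # cap memory usage
        "--pids-limit", str(pids_limit),          # cap number of processes
        image,
    ]


if __name__ == "__main__":
    # "example/app:1.0" is a hypothetical image name.
    command = hardened_run_command("example/app:1.0")
    print(shlex.join(command))
```

The returned list can be passed to `subprocess.run` on a machine with Docker installed. Building the command as a list rather than a shell string avoids quoting problems when values come from configuration.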

Navigating the Security Landscape

As organizations navigate the complex terrain of cybersecurity, understanding the nuances of container vs VM security is essential for building resilient and secure infrastructure. Whether you choose virtualization or containerization, the goal remains the same: to protect sensitive data, safeguard critical assets, and stay ahead of an ever-evolving threat landscape. With a proactive approach to security and a deep understanding of virtualization and containerization, organizations can fortify their defenses and ensure the integrity and confidentiality of their digital assets.

Containerization vs microservices